The late 1950s were such an optimistic time in America. World War II had been over for little more than a decade, the economy boomed thanks to pent-up demand after years of privation, and everyone was having babies, so many babies. The sky was the limit, especially with new technologies that promised a future filled with miracles, including abundant nuclear power that would be “too cheap to meter.”

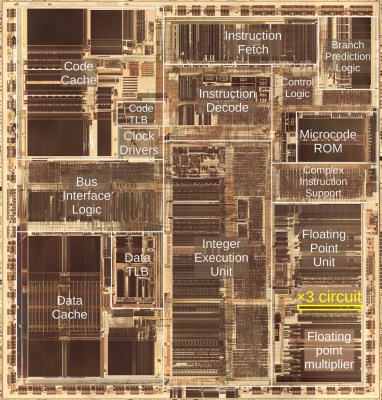

It didn’t quite turn out that way, of course, but the whole “Atoms for Peace” thing did provide the foundation for a lot of innovations that we still benefit from to this day. This 1958 film on “The Armour Research Reactor” details the construction and operation of the world’s first privately owned research reactor. Built at the Illinois Institute of Technology by Atomics International, the reactor was a 50,000-watt aqueous-homogeneous design using a solution of uranyl sulfate in distilled water as its fuel. The core is tiny, about a foot in diameter, and assembled by hand right in front of the camera. The stainless steel sphere is filled with 90 feet (27 meters) of stainless tubing to circulate cooling water through the core. Machined graphite reflector blocks surround the core and its fuel overflow tank (!) before the reactor is installed in “biological shielding” made from super-dense iron ore concrete with walls 5 feet (1.5 meters) thick, just one of the many advanced safety precautions taken “to ensure completely safe operation in densely populated areas.”

While the reactor design is interesting enough, the control panels and instrumentation are what really caught our eye. The Fallout vibe is strong, including the fact that the controls are all right in the room with the reactor. This allows technicians equipped with their Cutie Pie meters to insert samples into irradiation tubes, some of which penetrate directly into the heart of the core, where neutron flux is highest. Experiments included the creation of radioactive organic compounds for polymer research, radiation hardening of those new-fangled transistors, and manufacturing radionuclides for the diagnosis and treatment of diseases.

This mid-century technological gem might look a little sketchy to modern eyes, but the Armour Research Reactor had a long career. It was in operation until 1967 and decommissioned in 1972, and similar reactors were installed in universities and private facilities all over the world. Most of them are gone now, though, with only five aqueous-homogeneous reactors left operating today.
