The use of brainwaves as control parameters for electronic systems is becoming quite widespread. The types of signals that we have access to are still quite primitive compared to what we might aspire to in our cyberpunk fantasies, but they’re a step in the right direction.

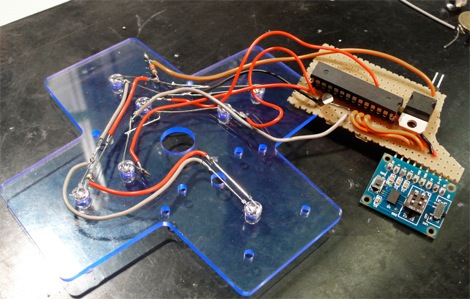

A very tempting aspect of accessing brain signals is that they can be used to circumvent physical limitations. [Jerkey] demonstrates this with his DIY brain-controlled electric wheelchair, which can move people who wouldn’t otherwise be able to operate joystick controls. The approach is direct: a laptop marshals the EEG data and passes it to an Arduino, which simulates joystick operations for the wheelchair’s control board. From experience we know that EEGs can be difficult to control right off the bat, so [Jerkey]’s warning at the beginning of the Instructable about having a spotter with a finger on the “off” switch should be heeded. Some automated collision avoidance might also be worth including.
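To make the laptop-to-Arduino pipeline concrete, here is a minimal sketch of what the laptop side might look like. It is not [Jerkey]’s code. It assumes, hypothetically, that the headset reports an “attention” score from 0 to 100 plus a deliberate-blink event, that the Arduino understands single-byte commands (`F`, `R`, `S`), and that a distance sensor is available for the collision override suggested above. The thresholds are made-up placeholders that would need tuning per user.

```python
import io
from dataclasses import dataclass

# Hypothetical one-byte protocol for the Arduino joystick emulator
FORWARD, RIGHT, STOP = b"F", b"R", b"S"

ATTENTION_THRESHOLD = 60  # hypothetical: focus score needed to drive forward
MIN_CLEARANCE_CM = 50     # hypothetical: stop if an obstacle is closer than this


@dataclass
class EEGSample:
    attention: int  # assumed 0-100 focus score from the headset
    blink: bool     # assumed deliberate-blink event


def choose_command(sample, obstacle_cm):
    """Map one EEG sample to a joystick command, with a collision override."""
    # Collision avoidance always wins over whatever the EEG suggests.
    if obstacle_cm is not None and obstacle_cm < MIN_CLEARANCE_CM:
        return STOP
    # A deliberate blink turns the chair in place.
    if sample.blink:
        return RIGHT
    # Sustained focus drives forward; anything else defaults to stopping.
    if sample.attention >= ATTENTION_THRESHOLD:
        return FORWARD
    return STOP


def drive(samples, distances, port):
    """Write one command byte per sample to a serial-like port."""
    for sample, dist in zip(samples, distances):
        port.write(choose_command(sample, dist))


if __name__ == "__main__":
    port = io.BytesIO()  # stand-in for a serial connection to the Arduino
    samples = [EEGSample(70, False), EEGSample(30, False),
               EEGSample(80, True), EEGSample(90, False)]
    distances = [200, 200, 200, 20]  # the last reading is an obstacle
    drive(samples, distances, port)
    print(port.getvalue())  # b'FSRS'
```

Defaulting to `STOP` whenever the signal is ambiguous keeps the failure mode boring, which matters when the person in the chair may not be able to intervene. The human spotter on the “off” switch is still essential, since software overrides only help if the software itself is behaving.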

We’ve covered voice-operated wheelchairs before, and we’d like to know how the two types of control would stack up against one another. EEGs are more immediate than speech, but we imagine that they’re harder to control.

It would be interesting, albeit somewhat trivial, to see [Jerkey]’s technique extended to control an ROV like Oberon, although depending on the faculties of the operator, the speech control could be difficult (would that make it more convincing as an alien robot diplomat?).