There’s tons of theory out there to explain the behavior of electronic circuits and electromagnetic waves. When it comes to visualization though, most of us have had to make do with our lecturer’s very finest blackboard scribbles, or some diagrams in a textbook. [Sam A] has been working on some glorious animated simulations, however, which show us various phenomena in a far more intuitive way.

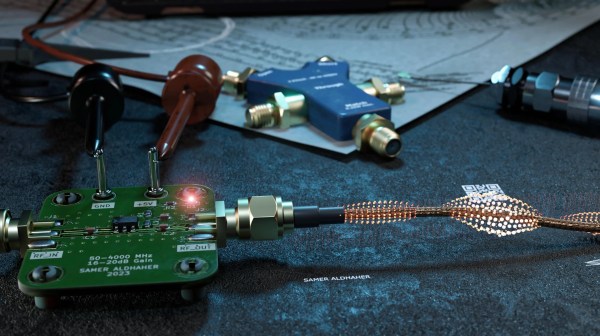

The animations were created in Blender, the popular 3D animation software. The underlying simulations were run on the openEMS platform, which [Sam] has used to run electromagnetic simulations of simple circuits via KiCad. From there, it was a matter of finding a way to get the simulation results out in a form that could be imported into Blender. This was achieved with ParaView acting as a conduit, paired with a custom Python script.
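
To give a feel for what such a conversion step might involve, here is a minimal sketch. It is not [Sam]'s script. It assumes, purely for illustration, that one time step of the field has already been reduced to a regular 2D grid of numbers, and it turns that grid into a height-field mesh in Wavefront OBJ format, which Blender can import. The function name `field_grid_to_obj` and its parameters are hypothetical.

```python
def field_grid_to_obj(samples, spacing=1.0, z_scale=1.0):
    """Return Wavefront OBJ text for a height-field mesh.

    samples: list of rows of floats, one hypothetical field snapshot
             on a regular 2D grid (an assumption, not the article's format).
    spacing: distance between neighbouring grid points.
    z_scale: factor applied to each sample to get the vertex height.
    """
    if not samples or not samples[0]:
        raise ValueError("samples must be a non-empty 2D grid")
    cols = len(samples[0])
    rows = len(samples)

    lines = ["# height-field mesh from one field snapshot"]

    # One vertex per grid point; the field value becomes the z coordinate.
    for j, row in enumerate(samples):
        if len(row) != cols:
            raise ValueError("all rows must have the same length")
        for i, value in enumerate(row):
            lines.append(
                f"v {i * spacing:.6f} {j * spacing:.6f} {value * z_scale:.6f}"
            )

    # One quad per grid cell. OBJ vertex indices start at 1.
    for j in range(rows - 1):
        for i in range(cols - 1):
            a = j * cols + i + 1   # (i,     j)
            b = a + 1              # (i + 1, j)
            c = b + cols           # (i + 1, j + 1)
            d = a + cols           # (i,     j + 1)
            lines.append(f"f {a} {b} {c} {d}")

    return "\n".join(lines) + "\n"


# A tiny 2x2 snapshot with made-up values.
snapshot = [[0.0, 1.0],
            [0.5, -0.5]]
obj_text = field_grid_to_obj(snapshot)
```

Writing one such file per time step would give Blender a sequence of meshes to work with. The real pipeline goes through ParaView and may look quite different.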

The result is that [Sam] can produce visually pleasing electromagnetic simulations that are easy to understand. One needn’t imagine an RF signal’s behaviour in a theoretical coax cable with no termination, when one can simply see what happens in [Sam]’s animation.
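
For those who want the number behind that picture, the textbook reflection coefficient at the end of a line is Γ = (Z_L − Z_0) / (Z_L + Z_0). The sketch below evaluates it for a few terminations. The 50 Ω characteristic impedance is an assumption chosen for illustration, not a value from [Sam]’s simulation, and the function name is hypothetical.

```python
import math


def reflection_coefficient(z_load, z0=50.0):
    """Voltage reflection coefficient for a real load on a line of impedance z0.

    z_load may be math.inf to represent an open (unterminated) end.
    z0 defaults to 50 ohms, an assumed value for illustration.
    """
    if math.isinf(z_load):
        # Limit of (z_load - z0) / (z_load + z0) as z_load grows without bound.
        return 1.0
    return (z_load - z0) / (z_load + z0)


open_end = reflection_coefficient(math.inf)  # no termination
shorted = reflection_coefficient(0.0)        # short circuit
matched = reflection_coefficient(50.0)       # load equals z0
```

With no termination the coefficient is 1, so the wave bounces back at full amplitude with the same polarity. A short gives −1 (full reflection, inverted), and a matched load gives 0, meaning nothing comes back.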

Simulation is a powerful tool which is often key to engineering workflows, as we’ve seen before.
