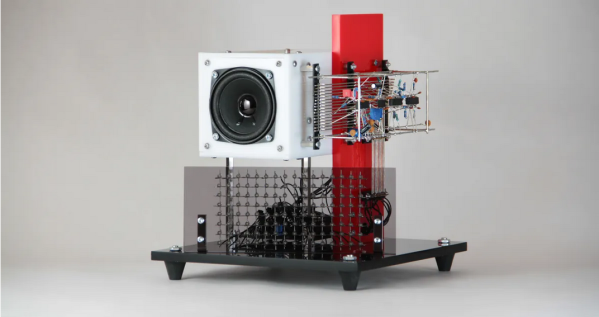

If you want to dip your toes into the deep, deep water of synth DIY but don’t know where to start, [Atarity] has just the resource for you. He’s compiled a list of 70 wonderful DIY synth and noise-making projects and put them all in one place. And as connoisseurs of the bleepy-bloopy ourselves, we can vouch for his choices here.

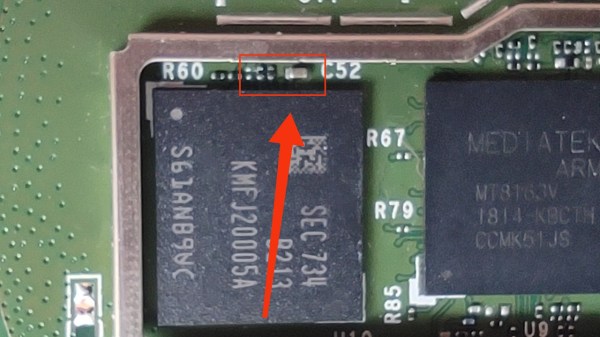

The collection runs the gamut from [Ray Wilson]’s “Music From Outer Space” analog oddities, through faithful recreations like Adafruit’s x0xb0x, and on to more modern synths powered by simple microcontrollers or even entire embedded Linux devices. Alongside the link to each original project, there is an estimate of its difficulty level, and every example we tried out also had a handy demo video.

Our only self-serving complaint is that it’s a little bit light on the Logic Noise / CMOS-abuse side of synth hacking, but there are tons of other non-traditional noisemakers, sound manglers, and a good dose of musically useful devices here. Pick one, and get to work!