Pitching a baseball is about accuracy and speed. A swift ball on target is the goal, allowing the pitcher to strike out the batter. [Nick Bild] created an AI system that can determine a ball’s trajectory in mid-flight, based on a camera feed.

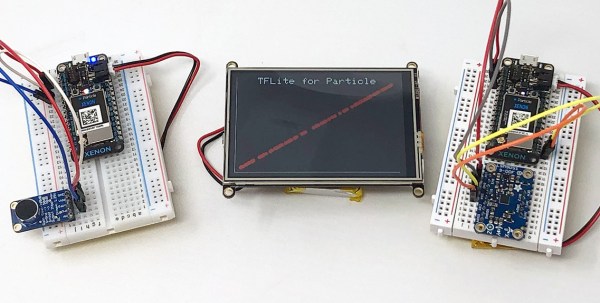

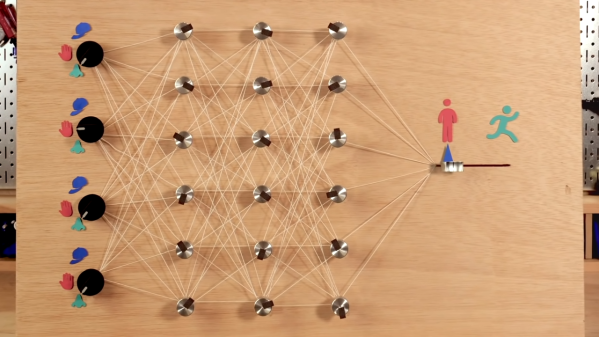

The system runs on an NVIDIA Jetson AGX Xavier fitted with a USB camera running at 100 FPS. A Nerf tennis ball launcher fires a ball towards the batter. Once triggered, the system uses the camera to capture two successive images of the ball in flight. These images are fed into a convolutional neural network (CNN), which determines whether the ball is heading for the strike zone or moving off-target. The result lights a green or red LED, respectively, to alert the batter.
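To make the two-frame idea concrete, here is a minimal pure-Python sketch of one way a CNN could consume a pair of successive frames. It stacks them as the two input channels, then applies a single convolution, ReLU, global average pooling, and a sigmoid output, and finally maps the result to the green/red LED. Everything specific here is hypothetical: the tiny 4×4 frames, the hand-set "motion" kernel (which responds to brightness that appears in the second frame but not the first), the weight and bias, and the 0.5 threshold. This is not [Nick]'s actual model, which would be trained on real footage and be far larger.

```python
import math
from typing import List

Frame = List[List[float]]  # grayscale image: rows of pixel intensities in [0, 1]


def stack_frames(prev: Frame, curr: Frame) -> List[Frame]:
    """Combine two successive frames into a 2-channel input (channels, height, width)."""
    if len(prev) != len(curr) or len(prev[0]) != len(curr[0]):
        raise ValueError("frames must have the same dimensions")
    return [prev, curr]


def conv2d(x: List[Frame], kernel: List[Frame], bias: float = 0.0) -> Frame:
    """Valid (no padding), stride-1 convolution as used in CNN layers (i.e. cross-correlation),
    summing over all input channels to produce one output feature map."""
    channels, h, w = len(x), len(x[0]), len(x[0][0])
    kh, kw = len(kernel[0]), len(kernel[0][0])
    out: Frame = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            s = bias
            for c in range(channels):
                for di in range(kh):
                    for dj in range(kw):
                        s += x[c][i + di][j + dj] * kernel[c][di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(fmap: Frame) -> Frame:
    """Zero out negative activations."""
    return [[max(0.0, v) for v in row] for row in fmap]


def global_avg_pool(fmap: Frame) -> float:
    """Collapse a feature map to a single number by averaging it."""
    return sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))


def strike_probability(prev: Frame, curr: Frame, kernel: List[Frame],
                       weight: float, bias: float) -> float:
    """Forward pass: stack frames -> conv -> ReLU -> pool -> linear -> sigmoid."""
    feature = global_avg_pool(relu(conv2d(stack_frames(prev, curr), kernel)))
    logit = weight * feature + bias
    return 1.0 / (1.0 + math.exp(-logit))


def led_color(p_strike: float, threshold: float = 0.5) -> str:
    """Green if the network thinks the ball is headed for the strike zone, else red."""
    return "green" if p_strike >= threshold else "red"


def blank(h: int, w: int) -> Frame:
    return [[0.0] * w for _ in range(h)]


# Hypothetical example: a bright "ball" pixel moves diagonally between frames.
prev_frame = blank(4, 4)
prev_frame[1][1] = 1.0
curr_frame = blank(4, 4)
curr_frame[2][2] = 1.0

# Hand-set 2x2 kernel: -1 on the earlier frame, +1 on the later one (a crude motion detector).
motion_kernel = [[[-1.0, -1.0], [-1.0, -1.0]],
                 [[1.0, 1.0], [1.0, 1.0]]]

p = strike_probability(prev_frame, curr_frame, motion_kernel, weight=6.0, bias=-1.0)
print(f"P(strike) = {p:.3f} -> {led_color(p)} LED")
```

Stacking the frames as channels lets the very first convolution compare them directly, so the network sees motion rather than a single still. A real system would learn many kernels and layers from labeled pitches rather than use one hand-picked filter.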

While such a system is unlikely to appear in professional baseball anytime soon, it shows how quickly a neural network can classify what a camera sees, making the call while the ball is still in flight and often faster than a human observer could. [Nick] plans to move the system to faster hardware and to extend it to detect effects like spin and to locate the ball more precisely within the strike zone.

We’ve seen CNNs do everything from naming tomatoes to finding parking spaces. Video after the break.

Continue reading “AI Knows If The Pitch Is On Target Before You Do”