It’s Nobel season again, with announcements of the prizes in literature, economics, medicine, physics, and chemistry going to worthies the world over. The wording of the Nobel citations is usually a vast oversimplification of decades of research and ends up being a scientific word salad. But this year’s chemistry Nobel citation couldn’t be simpler: “For the development of lithium-ion batteries”. John Goodenough, Stanley Whittingham, and Akira Yoshino share the prize for separate work stretching back to the oil embargo of the early 1970s, when Whittingham invented the first lithium cathode. Goodenough made the major discovery in 1980 that adding cobalt improved the lithium cathode immensely, and Yoshino turned both discoveries into the world’s first practical lithium-ion battery in 1985. Normally, Nobel-worthy achievements are somewhat esoteric and cover a broad area of discovery that few ordinary people can relate to, but this is one that most of us literally carry around every day.

What’s going on with Lulzbot? Nothing good, if the reports of mass layoffs and employee lawsuits are to be believed. Aleph Objects, the Colorado company that manufactures the Lulzbot 3D printer, announced that they would be closing down the business and selling off the remaining inventory of products by the end of October. There was a reported mass layoff on October 11, with 90 of its 113 employees getting a pink slip. One of the employees filed a class-action suit in federal court, alleging that Aleph failed to give 60 days’ notice of terminations, which a company with 100 or more employees is required to do under federal law. As for the reason for the closure, nobody in the company’s leadership is commenting aside from the usual “streamlining operations” talk. Could it be that the flood of cheap 3D printers from China has commoditized the market, making it too hard for any manufacturer to stand out on features? If so, we may see other printer makers go under too.

For all the reported hardships of life aboard the International Space Station – the problems with zero-gravity personal hygiene, the lack of privacy, and an aroma that ranges from machine-shop to sweaty gym sock – the reward must be those few moments when an astronaut gets to go into the cupola at night and watch the Earth slide by. They all snap pictures, of course, but surprisingly few of them are cataloged or cross-referenced to the position of the ISS. So there’s a huge backlog of beautiful pictures of unidentified cities around the planet. Lost at Night aims to change that by enlisting the pattern-matching abilities of volunteers to compare problem images with known images of the night lights of cities around the world. If nothing else, it’s a good way to get a glimpse at what the astronauts get to see.

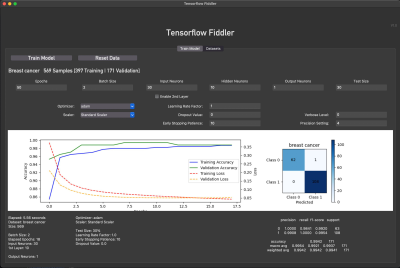

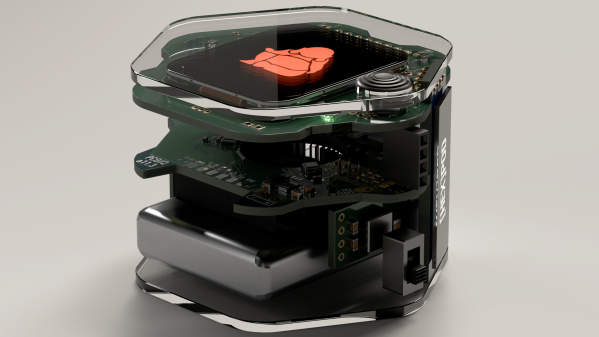

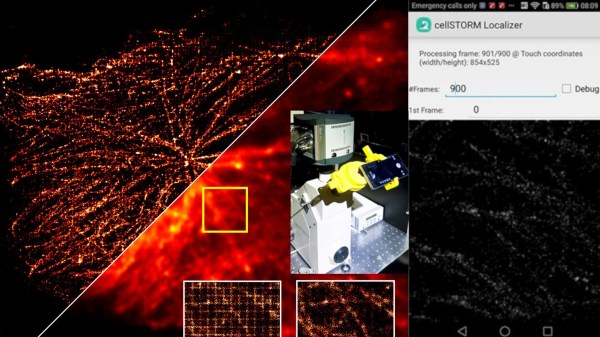

Which Pi is the best Pi when it comes to machine learning? That depends on a lot of things, and Evan at Edje Electronics has done some good work comparing the Pi 3 and Pi 4 in a machine vision application. The SSD-MobileNet model was compiled to run on TensorFlow, TF Lite, or the Coral USB accelerator, using both a Pi 3 and a Pi 4. Evan drove around with each rig as a dashcam, capturing typical street scenes and measuring the frame rate from each setup. It’s perhaps no surprise that the Pi 4 and Coral setup won the day, but the degree to which it won was unexpected. It blew everything else away with 34.4 fps; the other five setups ranged from 1.37 to 12.9 fps. Interesting results, and good to keep in mind for your next machine vision project.
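If you want to run a similar comparison on your own hardware, the core measurement is just frames processed divided by elapsed time. Here's a minimal sketch of that idea; it is not Evan's benchmark code, and `measure_fps`, `process_frame`, and the injectable `clock` are names made up for illustration. You would swap in whatever inference call your setup uses.

```python
import time


def measure_fps(process_frame, frames, clock=time.perf_counter):
    """Return the average frames per second achieved by process_frame.

    process_frame: hypothetical callable that runs inference on one frame.
    frames: any iterable of frames (camera captures, decoded video, etc.).
    clock: timer function; injectable so the math can be checked offline.
    """
    count = 0
    start = clock()
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = clock() - start
    if count == 0 or elapsed <= 0:
        return 0.0
    return count / elapsed


if __name__ == "__main__":
    # Stand-in workload: sleep 10 ms per "frame" instead of running a model.
    fps = measure_fps(lambda frame: time.sleep(0.01), range(50))
    print(f"average: {fps:.1f} fps")
```

Averaging over a long run of frames, rather than timing single frames, smooths out hiccups from the camera and the OS scheduler.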

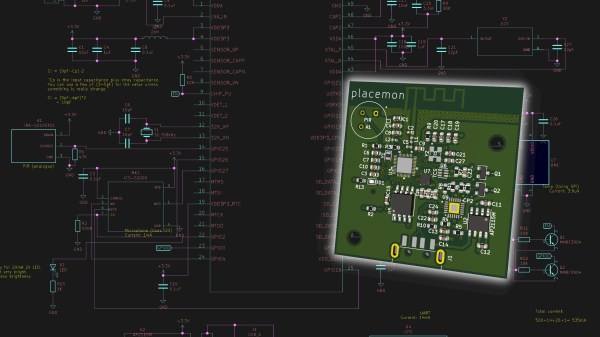

Have you accounted for shrinkage? No, not that shrinkage – shrinkage in your 3D-printed parts. James Clough ran into shrinkage issues with a part that needed to match up to a PCB he made. It didn’t, and he shared a thorough analysis of the problem and its solution. While we haven’t run into this problem yet, we can see how it happened – pretty much everything, including PLA, shrinks as it cools. He simply scaled up the model slightly before printing, which is a good tip to keep in mind.
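The arithmetic behind that kind of compensation is simple enough to sketch. Assuming shrinkage is uniform, you print a test piece, measure it, and scale the model by the ratio of the designed dimension to the measured one. The function name and the numbers below are hypothetical and not taken from James's analysis.

```python
def shrinkage_scale(nominal_mm, measured_mm):
    """Scale factor to apply to a model so the cooled print hits nominal size.

    nominal_mm: the dimension as designed in CAD.
    measured_mm: the same dimension measured on a printed test piece.
    Assumes uniform, linear shrinkage, which is a simplification.
    """
    if nominal_mm <= 0 or measured_mm <= 0:
        raise ValueError("dimensions must be positive")
    return nominal_mm / measured_mm


if __name__ == "__main__":
    # Hypothetical example: a 100.0 mm feature that printed at 99.5 mm.
    scale = shrinkage_scale(100.0, 99.5)
    print(f"scale the model by {scale * 100:.2f}% in the slicer")
```

Shrinkage can differ between axes and between materials, so it's worth measuring X, Y, and Z separately and redoing the test when you change filament.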

And finally, if you’ve ever tried to break a bundle of spaghetti in half before dropping it in boiling water, you likely know the heartbreak of multiple breakage – many of the strands will fracture into three or more pieces, with the shorter bits shooting away like so much kitchen shrapnel. Because the world apparently has no big problems left to solve, a group of scientists has now figured out how to break spaghetti into only two pieces. Oh sure, they mask it in a paper with the lofty title “Controlling fracture cascades through twisting and quenching”, but what it boils down to is applying an axial twist to the spaghetti before bending. That reduces the amount of bending needed to break the pasta, which reduces the shock that propagates along the strand and causes multiple breaks. They even built a machine to do just that, but since it only breaks a strand at a time, clearly there’s room for improvement. So get hacking!