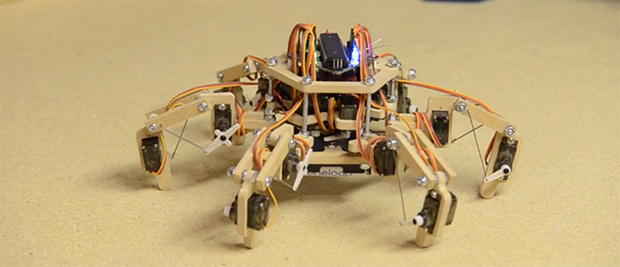

A while back, we had a sci-fi contest on Hackaday.io. Inspired by the replicators in Stargate SG-1, [The Big One] and a few other folks decided a remote-controlled hexapod would be a great build. The contest is long over, but that doesn’t mean development stopped. Now Stubby, the replicator-inspired hexapod, is complete, and he looks awesome.

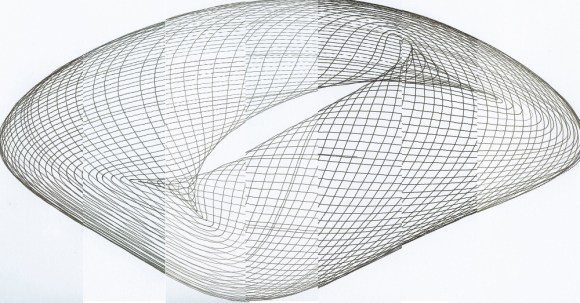

The first two versions suffered from underpowered servos and complex mechanics. Third time’s the charm: version three is a lightweight robot with fairly simple mechanics that can translate along and rotate about all three axes (X, Y, and Z). Stubby weighs only about 600 grams, batteries included, so he’s surprisingly nimble as well.
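Moving or tilting the body while the feet stay planted comes down to re-expressing each foot position in the moved body frame. The Python below is a minimal sketch of that idea under stated assumptions, not Stubby's actual firmware: the function names, the yaw-pitch-roll (Z-Y-X) angle convention, and millimetre units are all hypothetical choices for illustration.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """Z-Y-X (yaw, then pitch, then roll) rotation matrix; angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def foot_in_body_frame(foot, translation, roll=0.0, pitch=0.0, yaw=0.0):
    """Where a planted foot appears from the body after the body moves.

    foot: (x, y, z) of the foot in the original body frame (hypothetical mm).
    The body is shifted by `translation` and rotated by R; the foot stays fixed
    in the world, so its new body-frame position is R^T * (foot - translation).
    """
    r = rotation_matrix(roll, pitch, yaw)
    d = [foot[i] - translation[i] for i in range(3)]
    # Multiply by the transpose of R: result[j] = sum_i r[i][j] * d[i].
    return tuple(sum(r[i][j] * d[i] for i in range(3)) for j in range(3))


# Raise the body 10 mm: a foot at (100, 0, -50) now sits 60 mm below the body.
print(foot_in_body_frame((100.0, 0.0, -50.0), (0.0, 0.0, 10.0)))
```

Running every foot through the same transform each control cycle is one common way a hexapod can sway its body in place; each result would then be fed to a per-leg inverse-kinematics step.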

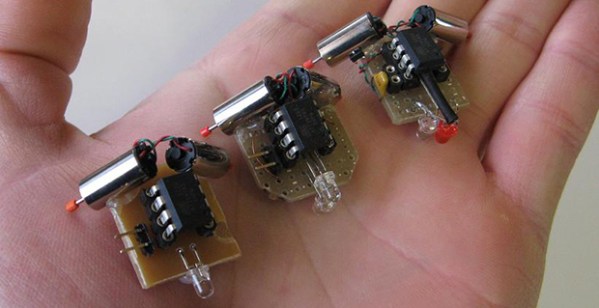

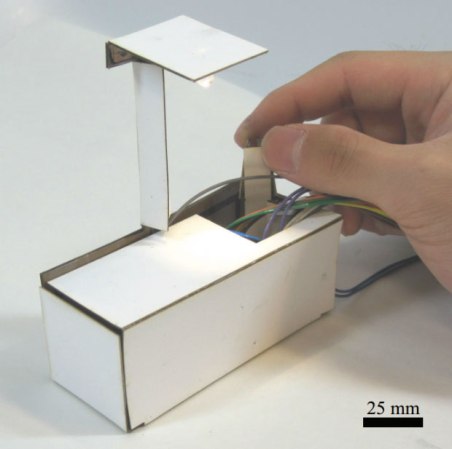

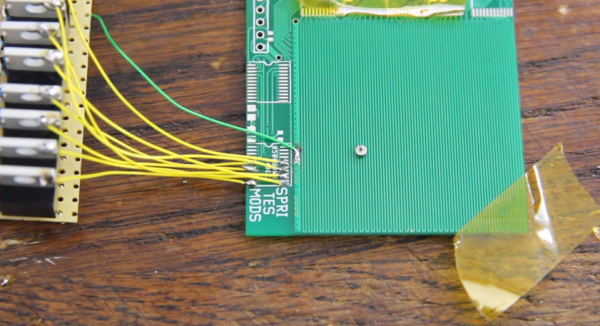

The frame of the hexapod is designed to be cut with a scroll saw, much to the delight of anyone without a CNC machine. There are three 9g servos per leg, all controlled by a custom board featuring an ATmega1284P and an XBee interface to an old PlayStation controller.
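With three servos per leg, each foot position maps to a hip (coxa) yaw angle plus a two-link femur/tibia problem that the law of cosines solves. The Python below is a minimal inverse-kinematics sketch under stated assumptions: the link lengths, axis conventions, and the `leg_ik` name are hypothetical and not taken from Stubby's design files.

```python
import math

# Hypothetical link lengths in millimetres; NOT measured from Stubby.
COXA_MM = 30.0
FEMUR_MM = 50.0
TIBIA_MM = 70.0


def leg_ik(x, y, z, coxa=COXA_MM, femur=FEMUR_MM, tibia=TIBIA_MM):
    """Return (hip_yaw, femur_pitch, knee) in degrees for a foot target.

    (x, y, z) is the foot position relative to the hip (coxa) joint, with x
    pointing straight out from the body and z pointing up. `knee` is the
    interior angle between femur and tibia (180 means the leg is straight).
    """
    hip_yaw = math.atan2(y, x)
    # Horizontal distance from the femur joint to the foot.
    r = math.hypot(x, y) - coxa
    reach = math.hypot(r, z)
    if reach == 0.0 or reach > femur + tibia or reach < abs(femur - tibia):
        raise ValueError("foot target out of reach")
    # Law of cosines on the femur/tibia triangle, clamped against rounding error.
    cos_a = (femur**2 + reach**2 - tibia**2) / (2 * femur * reach)
    cos_k = (femur**2 + tibia**2 - reach**2) / (2 * femur * tibia)
    femur_pitch = math.atan2(z, r) + math.acos(max(-1.0, min(1.0, cos_a)))
    knee = math.acos(max(-1.0, min(1.0, cos_k)))
    return tuple(math.degrees(a) for a in (hip_yaw, femur_pitch, knee))


# Femur level and tibia pointing straight down: roughly (0, 0, 90) degrees.
print(leg_ik(COXA_MM + FEMUR_MM, 0.0, -TIBIA_MM))
```

Turning these angles into servo pulse widths depends on how each servo is mounted and trimmed, so that mapping is left out of the sketch.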

Video of Stubby below, and of course all the sources and files are available on the project site.
