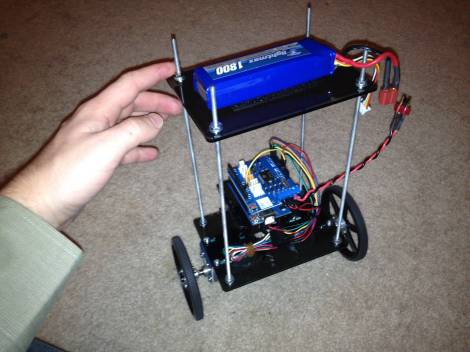

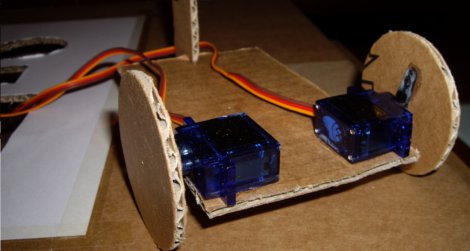

We love cheap stuff here. Who doesn’t? [Oscar Rodriguez Parra] does too, and wrote in to show us his super cheap robot, L.I.O.S. The build was for the AFRON design challenge, which involves building a $10 robot to teach students robotics. The winners of the challenge were neat and all, but they all look too fancy, flaunting their molded plastics and electronics breadboards.

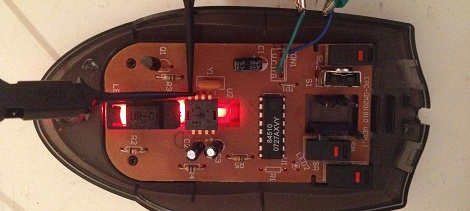

[Oscar’s] design is super simple: LDRs for eyes, a PIC12F683 to do the braining, LEDs for indicators, and a couple of modded servos to drive the wheels. An extraordinarily complex cardboard flap roller helps the cart turn, but it probably isn’t going to see much aside from smooth flooring. The electronics are mounted using one of our favorite techniques, the paper perf board (very similar to the substrate-free technique).
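We haven’t seen the firmware, so take this as a guess at the kind of logic two LDR eyes and two wheel servos invite, not as [Oscar’s] actual code. It is sketched in Python for readability; the real thing would run on the PIC. The function name `steer`, the `deadband` value, and the idea that a higher reading means more light are all our own assumptions.

```python
def steer(left_ldr, right_ldr, deadband=20):
    """Pick wheel commands for a hypothetical light-seeking robot.

    left_ldr / right_ldr: light readings, assumed higher = brighter.
    Returns (left_wheel, right_wheel), where 1 = drive and 0 = stop.
    """
    diff = left_ldr - right_ldr

    # Readings close enough to equal: the light is dead ahead, go straight.
    if abs(diff) <= deadband:
        return (1, 1)

    # Left eye brighter: stop the left wheel so the right wheel swings
    # the cart toward the left.
    if diff > 0:
        return (0, 1)

    # Otherwise the right eye is brighter: swing toward the right.
    return (1, 0)
```

The deadband keeps the cart from twitching left and right when both eyes see roughly the same amount of light.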

Check out the video after the jump to see L.I.O.S. in action. This is an excellent introduction to robotics for any classroom. Thanks [Oscar]!