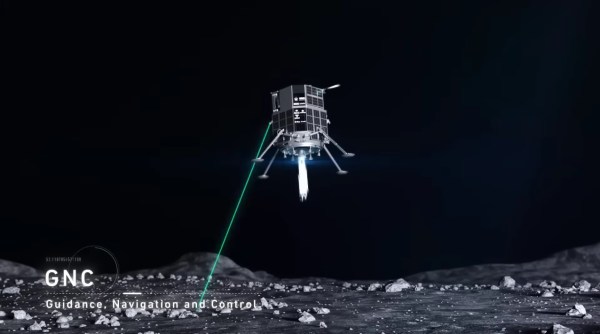

When a computer crashes, it usually doesn’t leave debris. But when a computer happens to be descending towards the lunar surface and glitches out, that’s a very different story. That’s what happened on April 26th, when the Japanese Hakuto-R lunar lander made its mark on the Moon…by crashing into it. [Scott Manley] dove in to try to understand the software bug that caused an otherwise flawless mission to go splat.

The lander began its descent sequence as planned from 100 km above the surface. As it descended, however, its onboard estimate of its altitude fell further and further below the true value: when it believed it had reached zero altitude, it was actually still about 5 km above the surface. Confused that it hadn’t yet detected physical contact with the ground, the craft kept descending slowly until it ran out of fuel and plunged to the surface.

Ultimately, it all came down to sensor fusion. The lander merges data from several noisy sensors, such as accelerometers, gyroscopes, and a radar altimeter, into one cohesive source of truth. Partway down, the craft passed over a particularly large cliff that caused the radar altimeter’s reading to suddenly jump up by 3 km. Like good filtering software, the craft reasoned that the sensor must be returning spurious data and filtered it out. From then on, it was estimating its altitude only from its measured acceleration. As anyone who has tried to track an object through space using gyros and accelerometers alone can attest, errors accumulate: altitude comes from integrating acceleration twice, so even a tiny constant sensor bias produces a position error that grows with the square of time, and suddenly you’re not where you think you are.
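The failure mode is easy to reproduce in a toy model. The sketch below is a hypothetical one-dimensional altitude estimator, not ispace’s flight software: it dead-reckons from an accelerometer and blends in altimeter readings, but rejects any reading that disagrees with its prediction by more than a fixed gate. The names `GatedAltitudeFilter` and `simulate`, the 500 m gate, the starting height, descent rate, accelerometer bias, and filter gain are all made-up illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class GatedAltitudeFilter:
    """Hypothetical 1-D altitude estimator with an outlier gate (not flight code).

    In this simplified sketch the altimeter corrects only the altitude;
    the velocity estimate is never corrected.
    """
    altitude: float        # estimated height above terrain (m)
    velocity: float        # estimated vertical velocity (m/s), positive is up
    gain: float = 0.2      # fraction of an accepted innovation applied to the estimate
    gate: float = 500.0    # readings further than this from the prediction are rejected (m)

    def predict(self, accel: float, dt: float) -> None:
        # Dead reckoning: integrate measured acceleration into velocity and altitude.
        self.altitude += self.velocity * dt + 0.5 * accel * dt * dt
        self.velocity += accel * dt

    def update(self, measured: float) -> bool:
        # Innovation: how far the altimeter reading disagrees with the prediction.
        innovation = measured - self.altitude
        if abs(innovation) > self.gate:
            return False   # treated as a sensor glitch and ignored
        self.altitude += self.gain * innovation
        return True


def simulate(steps: int = 400, jump_at: int = 100, dt: float = 1.0,
             gate: float = 500.0) -> tuple[float, float, int]:
    """Constant-rate descent; the terrain drops 3 km below the lander at step `jump_at`."""
    height, vel = 12_000.0, -10.0   # hypothetical true height above terrain and velocity
    accel_bias = -0.002             # hypothetical accelerometer bias (m/s^2)
    f = GatedAltitudeFilter(altitude=height, velocity=vel, gate=gate)
    rejected = 0
    for k in range(steps):
        height += vel * dt          # true acceleration is zero
        if k == jump_at:
            height += 3_000.0       # lander passes the cliff edge; ground falls away
        f.predict(accel=accel_bias, dt=dt)   # accelerometer reports only its bias
        if not f.update(height):             # altimeter assumed noise-free
            rejected += 1
    return height, f.altitude, rejected


if __name__ == "__main__":
    true_h, est_h, n_rejected = simulate()
    print(f"true {true_h:.0f} m, estimated {est_h:.0f} m, {n_rejected} readings rejected")
```

With these made-up numbers, the gate never lets go once the ground falls away: every later altimeter reading stays about 3 km above the prediction, so all 300 of them are rejected, and the small accelerometer bias piles on roughly another 150 m, leaving the estimate about 3.15 km too low. Running `simulate(gate=float("inf"))` accepts the jump and ends within a few meters of the truth, which hints at why a rejection gate on its own is risky: a sensor that disagrees persistently might be the one telling the truth.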

We know what you’re thinking: surely they would have run landing simulations to catch errors like these? Ironically, they did; it’s just that the landing site for Hakuto-R was changed after the simulations were run. Unfortunately, nobody thought to re-run them, and now the Moon has a new lawn ornament.

We’ve previously written about why lunar landings are so hard. While knowing what led to the crash will hopefully prevent a similar fate for future missions, the reality is that remotely landing a robot on a dusty world without the help of GPS is fiendishly difficult and likely will be for some time.
