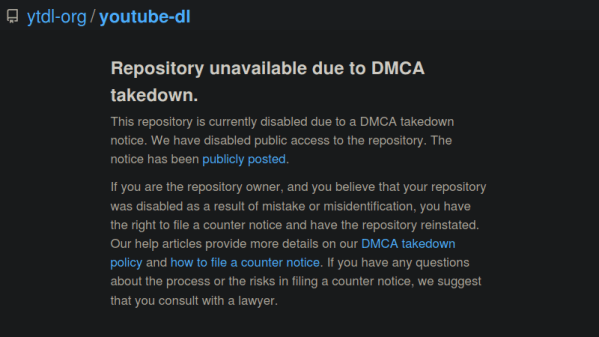

At this point, you’ve likely heard that the GitHub repository for youtube-dl was recently removed in response to a DMCA takedown notice filed by the Recording Industry Association of America (RIAA). As the name implies, this popular Python program allowed users to produce local copies of audio and video that had been uploaded to YouTube and other content hosting sites. It’s a critical tool for digital archivists, people with slow or unreliable Internet connections, and more than a few Hackaday writers.

It will probably come as no surprise to hear that the DMCA takedown and subsequent removal of the youtube-dl repository have utterly failed to contain the spread of the program. In fact, you could easily argue that they’ve done the opposite. The developers could never have afforded the amount of publicity the project is currently enjoying, and as the code has been released into the public domain, users are free to share it however they see fit. This is one genie that absolutely won’t be going back into its bottle.

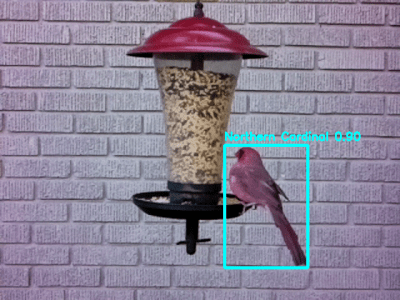

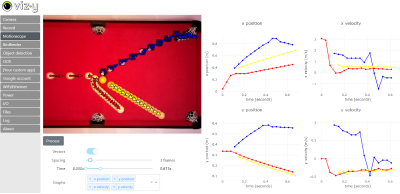

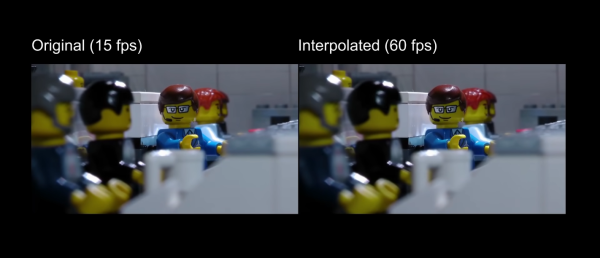

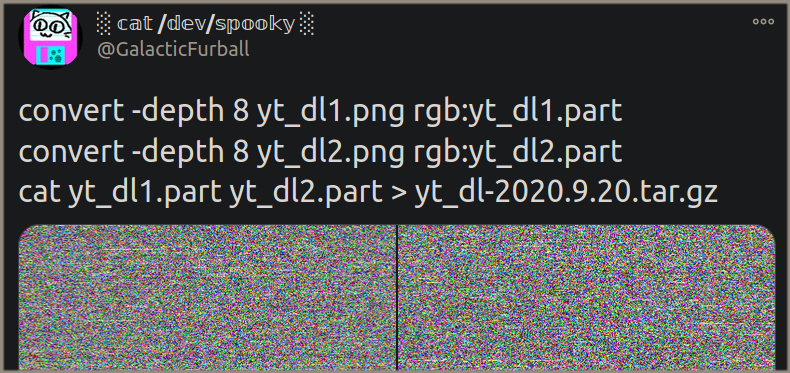

In true hacker spirit, we’ve started to see some rather inventive ways of spreading the outlawed tool. A Twitter user by the name of [GalacticFurball] came up with a way to convert the program into a pair of densely packed rainbow images that can be shared online. After downloading the PNG files, a command-line ImageMagick incantation turns the images into a compressed tarball of the source code. A similar trick was one of the ways used to distribute the DeCSS DVD decryption code back in 2000. Unfortunately, we doubt anyone is going to get the ~14,000 lines of Python code that make up youtube-dl printed up on any t-shirts.
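To give a feel for how a trick like this can work, here is a minimal Python sketch of the general idea: treat every three bytes of a compressed archive as one RGB pixel, then reverse the process to get the bytes back. This is not [GalacticFurball]’s actual method, which relies on ImageMagick and real PNG files. The function names, the four-byte length header, and the row width are all assumptions made for illustration, and the pixels are kept in plain Python lists rather than written out as an image.

```python
import math
import struct
import zlib


def bytes_to_pixels(data: bytes, width: int = 64):
    """Pack raw bytes into rows of (r, g, b) pixels.

    A 4-byte big-endian length header is prepended (an assumption of this
    sketch) so the decoder knows where the zero padding begins.
    """
    payload = struct.pack(">I", len(data)) + data
    # Pad so the payload fills a whole number of rows of `width` pixels.
    row_bytes = width * 3
    padded_len = math.ceil(len(payload) / row_bytes) * row_bytes
    payload = payload.ljust(padded_len, b"\x00")
    pixels = [tuple(payload[i:i + 3]) for i in range(0, len(payload), 3)]
    return [pixels[r:r + width] for r in range(0, len(pixels), width)]


def pixels_to_bytes(rows) -> bytes:
    """Flatten the pixel rows and strip the length header and padding."""
    flat = bytes(channel for row in rows for pixel in row for channel in pixel)
    (length,) = struct.unpack(">I", flat[:4])
    return flat[4:4 + length]


if __name__ == "__main__":
    # Stand-in for the compressed source tarball.
    source = b"print('hello')\n" * 100
    rows = bytes_to_pixels(zlib.compress(source), width=16)
    restored = zlib.decompress(pixels_to_bytes(rows))
    print("round trip ok:", restored == source)
```

Compressed data looks close to random, so pixels built this way come out as colorful noise. The sketch assumes a lossless container: any service that recompresses the image lossily would corrupt the payload.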

It’s worth noting that GitHub has officially distanced itself from the RIAA’s position. The company was forced to remove the repo when it received the DMCA takedown notice, but CEO Nat Friedman dropped into the project’s IRC channel with a promise that efforts were being made to rectify the situation as quickly as possible. In a recent interview with TorrentFreak, Friedman said the removal of youtube-dl from GitHub was at odds with the company’s own internal archival efforts and financial support for the Internet Archive.

But as it turns out, some changes will be necessary before the repository can be brought back online. While there’s certainly some debate to be had about the overall validity of the RIAA’s claim, it isn’t completely without merit. As pointed out in the DMCA notice, the project made use of several automated tests that ran the code against copyrighted works from artists such as Taylor Swift and Justin Timberlake. These were admittedly very poor choices to use as official test cases, but the RIAA’s assertion that the entire project exists solely to download copyrighted music has no basis in reality.

[Ed Note: This is only about GitHub. You can still get the code directly from the source.]