[Bruce Land], professor at Cornell, is a frequent submitter to our tip line. Usually he sends in a few links every semester from undergraduate electronics courses. Now the fall semester is finally over and it’s time to move on to the more ambitious master’s projects.

First up is a head-mounted eye tracker. [Anil Ram Viswanathan] and [Zelan Xiao] put together a lightweight, low-cost eye tracking project that records where the user is looking.

The eye tracker hardware is made of two cameras mounted on a helmet. The first camera faces forward, looking at the same thing the user is. The second camera is directed towards the user’s eye. A series of algorithms detects the iris of the user’s eye and overlays the expected gaze position on the output of the first camera. Here’s the design report. PDF, natch.
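For a rough idea of how the two camera feeds could be tied together, here is a minimal Python sketch. It is not the authors' algorithm: it assumes the pupil shows up as the darkest blob in the eye camera frame, and that a simple per-axis linear calibration is good enough to map eye coordinates into the forward camera's image. The function names (`pupil_center`, `fit_axis`, `map_gaze`) and the threshold value are hypothetical, picked for illustration only.

```python
def pupil_center(gray, threshold=50):
    """Return the (x, y) centroid of all pixels darker than `threshold`.

    `gray` is a list of rows of 0-255 intensities from the eye camera.
    Assumption: the pupil is the darkest region in the frame.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None  # no dark pixels found, e.g. during a blink
    return (sum_x / count, sum_y / count)


def fit_axis(eye_vals, scene_vals):
    """Least-squares fit of scene = a * eye + b for one axis.

    Calibration samples would come from the user looking at known points.
    """
    n = len(eye_vals)
    mean_eye = sum(eye_vals) / n
    mean_scene = sum(scene_vals) / n
    var = sum((e - mean_eye) ** 2 for e in eye_vals)
    cov = sum((e - mean_eye) * (s - mean_scene)
              for e, s in zip(eye_vals, scene_vals))
    a = cov / var
    b = mean_scene - a * mean_eye
    return a, b


def map_gaze(center, cal_x, cal_y):
    """Map an eye-camera pupil center to forward-camera pixel coordinates."""
    ax, bx = cal_x
    ay, by = cal_y
    return (ax * center[0] + bx, ay * center[1] + by)


# Tiny synthetic eye frame: bright background with a 2x2 dark "pupil".
frame = [[200] * 5 for _ in range(5)]
for y in (2, 3):
    for x in (1, 2):
        frame[y][x] = 10

center = pupil_center(frame)
cal = fit_axis([0, 10], [100, 200])  # made-up calibration samples
gaze = map_gaze(center, cal, cal)
```

The returned `gaze` point is where you would draw the marker on the forward camera's frame. A real tracker would need something sturdier than a global threshold, since eyelashes and shadows are dark too.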

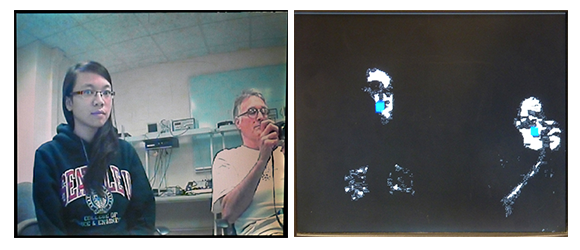

Next up is a face tracking project implemented on an FPGA. This project started out as a software implementation of a face tracking algorithm in MATLAB. [Thu-Thao Nguyen] translated this MATLAB code to Verilog and eventually got her hardware running on an FPGA dev board. Another design report.

Having a face detection and tracking system running on an FPGA is extremely interesting; the FPGA makes face tracking a very low-power and hopefully lower-cost solution, which could allow it to be used in portable and consumer devices.

You can check out the videos for these projects after the break.