When Amazon released the API for its Alexa voice service, it basically forced any serious players in this domain to bring their offerings out into the hacker/maker market as well. Now Google and Raspberry Pi have come together to bring us ‘Artificial Intelligence Yourself’ or AIY.

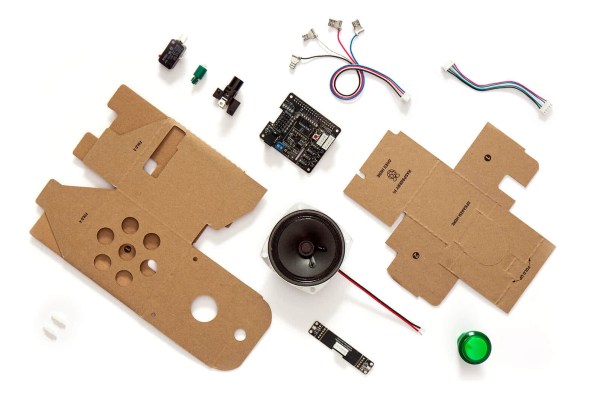

Google made a free hardware kit that was distributed with Issue 57 of the MagPi Magazine, which is targeted at makers and hobbyists. You can see the kit in the video after the break. It contains a Raspberry Pi Voice HAT, a microphone board, a speaker, and a number of small bits to mount the kit on a Raspberry Pi 3. Putting all of it together and following the instructions on the official site gets you a Google Voice Interaction Kit with a bunch of IOs just screaming to be put to good use.

The source code for the Python app can be downloaded from GitHub and consists of a loop that awaits a trigger. This trigger can be a press of a button or a clap near the microphones. When a trigger is detected, the recorder function takes over and sends the audio stream to the Google Cloud. Speech-to-text conversion happens there, and the result is returned via a text-to-speech engine that lets the system talk back. The repository suggests that the official Voice Kit SD image (893 MB download) is based on Raspbian, so don’t go reflashing a memory card right away; you should be able to add this to an existing install.
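To make the flow concrete, here is a minimal sketch of that trigger loop. It is not the code from the GitHub repository: `run_assistant` and its `record`, `transcribe`, and `speak` parameters are hypothetical names invented for illustration, and the cloud and audio steps are passed in as plain callables so the example runs without any hardware or network access. Speaking the transcript straight back is also a simplification.

```python
from typing import Callable, Iterable, List


def run_assistant(
    triggers: Iterable[str],
    record: Callable[[], bytes],
    transcribe: Callable[[bytes], str],
    speak: Callable[[str], None],
) -> List[str]:
    """Handle one interaction per valid trigger; return the recognized texts.

    All four arguments are hypothetical stand-ins, not the real AIY API.
    """
    transcripts = []
    for trigger in triggers:
        # Only a button press or a clap starts an interaction.
        if trigger not in ("button", "clap"):
            continue
        audio = record()          # capture audio from the microphones
        text = transcribe(audio)  # stand-in for the cloud speech-to-text step
        speak(text)               # stand-in for the text-to-speech reply
        transcripts.append(text)
    return transcripts


if __name__ == "__main__":
    spoken = []
    heard = run_assistant(
        triggers=["button", "noise", "clap"],
        record=lambda: b"fake-audio",
        transcribe=lambda audio: "heard {} bytes".format(len(audio)),
        speak=spoken.append,
    )
    print(heard)
```

On real hardware the `triggers` iterable would be an endless stream of button or clap events, and the callables would wrap the microphone, the cloud request, and the speaker.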
