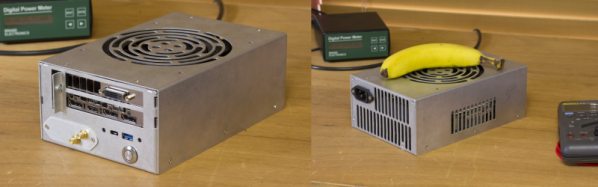

When building a custom computer rig, most people put the SMPS power supply inside the computer case. [James], a.k.a. [Aibohphobia], a.k.a. [fearofpalindromes], turned that inside out and built the STX160.0: a full-fledged gaming computer stuffed inside an ATX power supply enclosure. While Small Form Factor (SFF) computers are nothing new, his build packs a powerful punch into a small enclosure and is a great example of computer modding, hacker ingenuity and engineering. The finished computer uses a Mini-ITX form factor motherboard with an Intel i5 6500T quad-core 2.2GHz processor, an EVGA GTX 1060 SC graphics card, 16GB of DDR4 RAM, a 250GB SSD, a WiFi card and two USB ports, all powered from a 160 W AC-DC converter. Its external dimensions are the same as an ATX-EPS power supply at 150 L x 86 H x 230 D mm. The STX160.0 is powered directly from the mains rather than from an external brick, which [James] feels would have been cheating.

For those who would like a quick, TL;DR pictorial review, head over to his photo album on Imgur first to feast on pictures of the completed computer and its innards. But the devil is in the details, so check out the forum thread for a ton of interesting build information, component sources, tricks and trivia. For example, to connect the graphics card to the motherboard, he used an “M.2 to powered PCIe x4 adapter” coupled with a flexible cable extender from a quaint company called Adex Electronics, which still prefers to do business the old-fashioned way and whose website might remind you of the days when Netscape Navigator was the dominant browser.

As a benchmark, [James] posts that “with the cover panel on, at full load (Prime95 Blend @ 2 threads and FurMark 1080p 4x AA) the CPU is around 65°C with the CPU fan going at 1700RPM, and the GPU is at 64°C at 48% fan speed.” Fairly impressive for what could be passed off at first glance as a power supply.

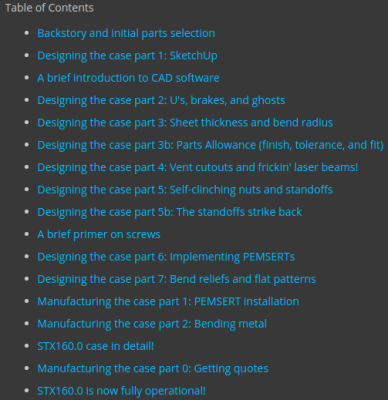

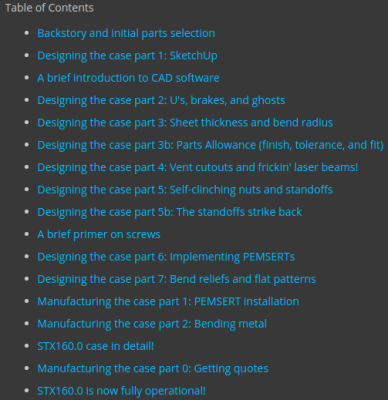

There are two really interesting takeaways for us in this project. The first is his meticulous research to find specific parts that met his requirements from among the vast number of available choices. The second is his extremely detailed notes on designing the custom enclosure and making it DFM (design for manufacturing) friendly so it could be mass-produced. Just take a look at his “Table of Contents” for a taste of the amount of ground he covers. If you are interested in custom builds and computer modding, there is a huge amount of useful information in there for you.

Thanks to [Arsenio Dev] who posted a link to this hilarious thread on Reddit discussing the STX160.0. Check out a full teardown and review of the STX160.0 by [Not for Concentrate] in the video after the break.
