There are a ton of inventions out in the world that are almost complete accidents, but are still ubiquitous in our day-to-day lives. Things like bubble wrap which was originally intended to be wallpaper, or even superglue, a plastic compound whose sticky properties were only discovered later on. IBM found themselves in a similar predicament in the 1970s after working on a type of mainframe computer made to be a phone switch. Eventually the phone switch was abandoned in favor of a general-purpose processor but not before they stumbled onto the RISC processor which eventually became the IBM 801.

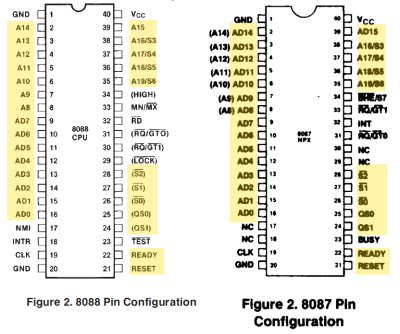

As [Paul] explains, the major design philosophy at the time was to use a large amount of instructions to do specific tasks within the processor. When designing the special-purpose phone switch processor, IBM removed many of these instructions and then, after the project was cancelled, performed some testing on the incomplete platform to see how it performed as a general-purpose computer. They found that by eliminating all but a few instructions and running those without a microcode layer, the processor performance gains were much more than they would have expected at up to three times as fast for comparable hardware.

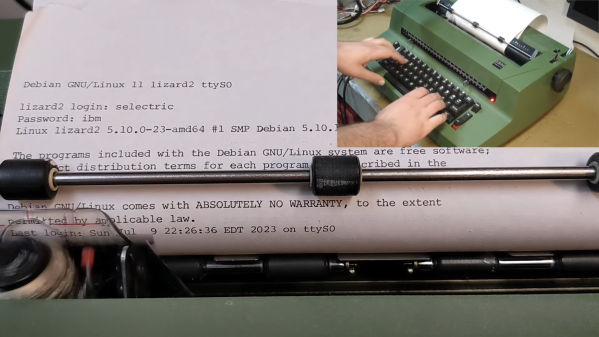

These first forays into the world of simplified processor architecture both paved the way for the RISC platforms we know today such as ARM and RISC-V, but also helped CISC platforms make tremendous performance gains as well. In fact, RISC-V is a direct descendant from these early RISC processors, with three intermediate designs between then and now. If you want to play with RISC-V yourself, our own [Jonathan Bennett] took a look at a recent RISC-V SBC and its software this past March.

Thanks to [Stephen] for the tip!