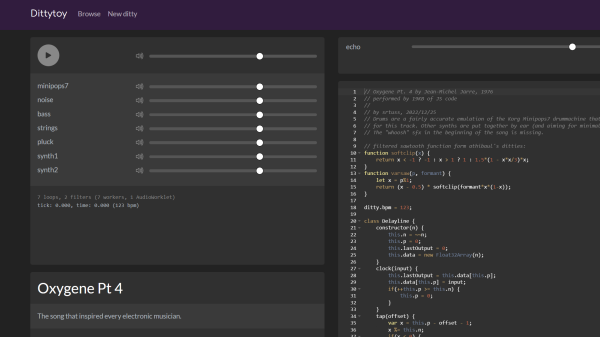

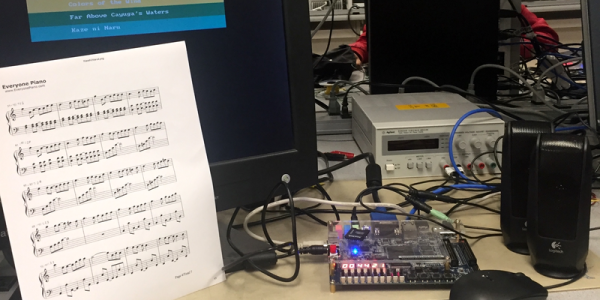

The super-talented [Switch Angel] is an electronic music artist with a few cool YouTube videos that show off their absolute mastery of live coding with Strudel. For us mere mortals: Strudel is a JavaScript port of TidalCycles, an algorithmic music generator that supports live coding, i.e. the music passed to the synthesizer changes on the fly as you edit the code. It's magical to watch (and listen to) how you can adapt and distort the music to your whims just by tweaking a few lines of code: no compilation steps, hardly any debugging, and instant results.

The traditional approach in music generators like this is to create lists of note/instrument pairs with appropriate modifiers. Each sound is specified in sequence, so adding a sound extends the sequence a little. Strudel/TidalCycles works differently: it is based on patterns that repeat over a fixed period of time, called a cycle. Adding an extra sound, or breaking one sound slot down into several sounds, squeezes the remaining slots so that the whole pattern still repeats in the same period, with each sound taking up less of it. This simple change makes it really easy to add layer upon layer of interest within a sequence with a few extra characters, without recalculating everything else to fit. On top of this base, you can layer many effects (more than we can mention here), and all of them can be adjusted with pop-in sliders directly in the code.
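To make the "squeezing" concrete: in Strudel's mini-notation, `sound("bd hh sd hh")` plays four equal steps per cycle, and `sound("bd [hh hh] sd hh")` splits the second step into two hi-hats without moving anything else. The TypeScript below is a hypothetical toy model of that timing rule, not how Strudel is actually implemented; the names `Step`, `TimedSound` and `layout` are invented for illustration. Each level of nesting simply divides its parent slot evenly.

```typescript
// Hypothetical toy model of cycle-based timing; not Strudel's internal code.
// A step is either a sound name or a nested group that shares one parent slot.
type Step = string | Step[];

interface TimedSound {
  sound: string;
  start: number;    // onset, as a fraction of one cycle
  duration: number; // length, as a fraction of one cycle
}

// Split the span [start, start + span) evenly between the steps.
// Nested groups recurse, so they subdivide only their own slot.
function layout(steps: Step[], start = 0, span = 1): TimedSound[] {
  const result: TimedSound[] = [];
  const slot = span / steps.length;
  steps.forEach((step, i) => {
    const onset = start + i * slot;
    if (typeof step === "string") {
      result.push({ sound: step, start: onset, duration: slot });
    } else {
      result.push(...layout(step, onset, slot));
    }
  });
  return result;
}

// Roughly analogous to the mini-notation "bd [hh hh] sd hh".
const events = layout(["bd", ["hh", "hh"], "sd", "hh"]);
for (const e of events) {
  console.log(`${e.sound} at ${e.start} for ${e.duration}`);
}
// prints:
// bd at 0 for 0.25
// hh at 0.25 for 0.125
// hh at 0.375 for 0.125
// sd at 0.5 for 0.25
// hh at 0.75 for 0.25
```

Appending a fifth step, as in `layout(["bd", "hh", "sd", "hh", "cp"])`, shrinks every slot to a fifth of a cycle instead of making the pattern longer, which is the behaviour described above.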