The idea of having software translation programs around to do things like emulate a Super Nintendo on your $3000 gaming computer or, more practically, run x86 software on a new M1 Mac, seems pretty modern since it is so prevalent in the computer world today. The idea of using software like this is in fact much older and easily traces back into the 80s during the era of Commodore and Atari personal computers. Their hardware was actually not too dissimilar, and with a little bit of patience and know-how it’s possible to compile the Commodore 64 kernel on an Atari, with some limitations.

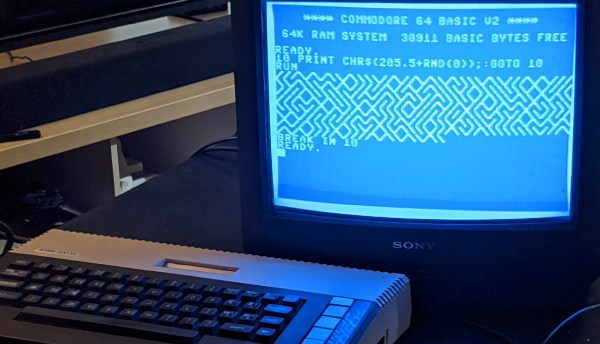

This project comes to us from [unbibium] and was inspired by a recent video he saw where the original Apple computer was emulated on Commodore 64. He took it in a different direction for this build though. The first step was to reformat the C64 code so it would compile on the Atari, which was largely accomplished with a Python script and some manual tweaking. From there he started working on making sure the ROMs would actually run. The memory setups of these two machines are remarkably similar which made this slightly easier, but he needed a few workarounds for a few speed bumps. Finally the cursor and HMIs were configured, and once a few other things were straightened out he has a working system running C64 software on an 8-bit Atari.

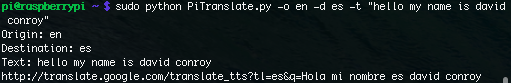

Unsurprisingly, there are a few things that aren’t working. There’s no IO besides the keyboard and mouse, and saving and loading programs is not yet possible. However, [unbibium] has made all of his code available on his GitHub page if anyone wants to expand on his work and may also improve upon this project in future builds. If you’re looking for a much easier point-of-entry for emulating Commodore software in the modern era, though, there is a project available to run a C64 from a Raspberry Pi.

Thanks to [Cprossu] for the tip!