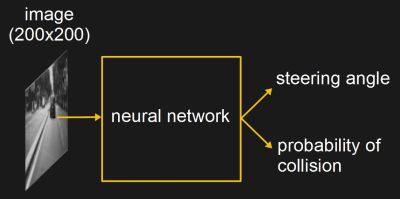

Neural networks are a core area of the artificial intelligence field. They can be trained on abstract data sets and be put to all manner of useful duties, like driving cars while ignoring road hazards or identifying cats in images. Recently, a biologist approached AI researcher [Janelle Shane] with a problem – could she help him name some tomatoes?

It’s a problem with a simple cause – like most people, [Darren] enjoys experimenting with tomato genetics, and thus requires a steady supply of names to designate the various varieties produced in this work. It can be taxing on the feeble human brain, so a silicon-based solution is ideal.

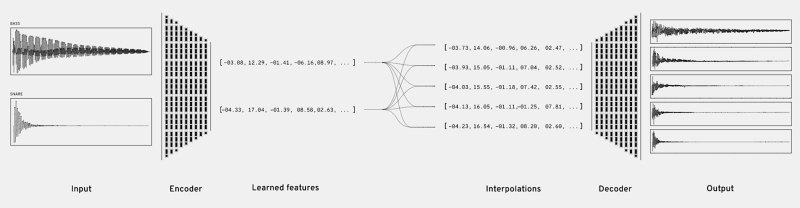

[Janelle] decided to use the char-rnn library built by [Andrej Karpathy] to do the heavy lifting. After training it on a list of over 11,000 existing tomato varieties, the neural network was then asked to strike out on its own.
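For a feel of what "train on a list of names, then generate new ones" means at the character level, here is a minimal Python sketch. To be clear, this is not char-rnn and not [Janelle]'s setup: char-rnn trains a recurrent neural network, while this stand-in is a much simpler character-level Markov chain that only looks at the previous two characters. The function names (`train`, `generate`), the `order` parameter, and the four-name training list are all assumptions made for illustration.

```python
import random
from collections import defaultdict

# Marker characters for the start and end of a name (assumed not to
# appear in the names themselves).
START, END = "^", "$"

def train(names, order=2):
    """Record which character follows each `order`-character context."""
    model = defaultdict(list)
    for name in names:
        padded = START * order + name + END
        for i in range(len(padded) - order):
            context = padded[i:i + order]
            model[context].append(padded[i + order])
    return model

def generate(model, order=2, max_len=30, rng=random):
    """Sample a name one character at a time until END or max_len."""
    context = START * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])
        if nxt == END:
            break
        out.append(nxt)
        # Slide the context window forward by one character.
        context = (context + nxt)[-order:]
    return "".join(out)

# A handful of example names only; the real project used over 11,000.
names = ["Golden Queen", "Green Zebra", "Black Krim", "Cherokee Purple"]
model = train(names)
rng = random.Random(0)  # fixed seed so runs are repeatable
samples = [generate(model, rng=rng) for _ in range(5)]
print(samples)
```

With only four training names the output will mostly echo or splice the inputs. The interesting results come from a far larger list and a model, like a recurrent network, that can pick up longer-range patterns than a two-character window.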

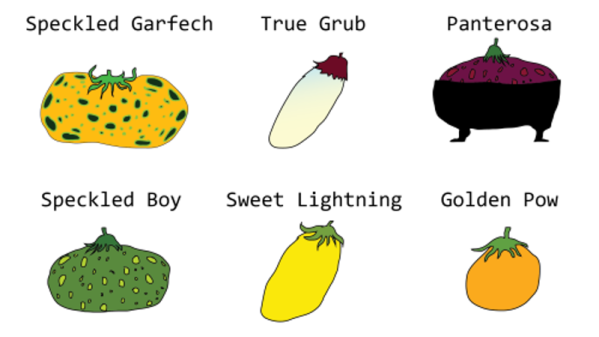

The results are truly fantastic – whether you’re partial to a Speckled Garfech or you prefer the smooth flavor of the Golden Pow, there’s a tomato to suit your tastes. When the network was retrained with additional content in the form of names of metal bands, the results got even better – it’s only a matter of time before Angels of Saucing reach a supermarket shelf near you.

On the surface, it’s a fun project with whimsical output, but fundamentally it highlights how much can be accomplished these days by standing on the shoulders of giants, so to speak. Now, if you need some assistance growing your tomatoes, the machines can help there, too.