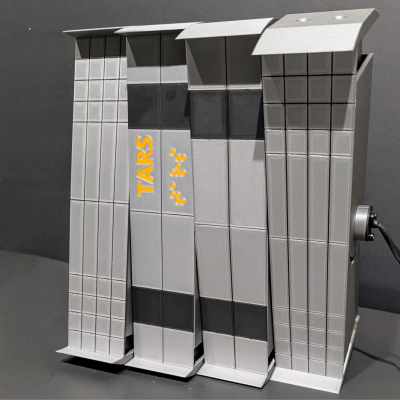

[Aditya Sripada] and [Abhishek Warrier]’s TARS3D robot came from asking what it would take to make a robot with the capabilities of TARS, the robotic character from Interstellar. We couldn’t find a repository of CAD files or code but the research paper for TARS3D explains the principles, which should be enough to inspire a motivated hacker.

What makes TARS so intriguing is the simple-looking structure combined with distinct and effective gaits. TARS is not a biologically-inspired design, yet it can walk and perform a high-speed roll. Making a real-world version required not only some inspired mechanical design, but also clever software with machine learning.

[Aditya] and [Abhishek] created TARS3D as a proof of concept not only of how such locomotion can be made to work, but also as a way to demonstrate that unconventional body and limb designs (many of which are sci-fi inspired) can permit gaits that are as effective as they are unusual.

TARS3D is made up of four side-by-side columns that can rotate around a shared central ‘hip’ joint as well as shift in length. In the movie, TARS is notably flat-footed but [Aditya] found that this was unsuitable for rolling, so TARS3D has curved foot plates.
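
To make that geometry concrete, here is a minimal sketch of how one might model a single column in software: a hip angle about the shared axis plus an adjustable length, from which a foot position follows. This is not taken from the TARS3D paper. The `Leg` class, the `foot_position` helper, the flat 2D side view, and all the numbers are assumptions made purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Leg:
    """One column of a hypothetical four-column, TARS-like robot."""
    hip_angle: float  # radians about the shared hip axis; 0 means pointing straight down
    length: float     # metres from hip axis to foot; adjustable because the column can extend


def foot_position(leg: Leg) -> tuple[float, float]:
    """Return the foot's (x, z) position relative to the hip, seen from the side.

    x is forward and z is up, so a leg hanging straight down has x = 0 and z = -length.
    """
    x = leg.length * math.sin(leg.hip_angle)
    z = -leg.length * math.cos(leg.hip_angle)
    return x, z


# Four side-by-side columns sharing one hip axis (illustrative values only).
legs = [
    Leg(hip_angle=0.0, length=0.30),
    Leg(hip_angle=math.radians(20), length=0.32),
    Leg(hip_angle=math.radians(-20), length=0.32),
    Leg(hip_angle=0.0, length=0.30),
]

for i, leg in enumerate(legs):
    x, z = foot_position(leg)
    print(f"leg {i}: x = {x:+.3f} m, z = {z:+.3f} m")
```

Even this toy model hints at why two degrees of freedom per column go a long way: swinging a column moves its foot fore and aft, while changing its length lifts or plants the foot.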

The rolling gait is fairly sensitive to terrain variations, but the walking gait proved quite robust. All in all, it’s an interesting platform that does more than just show that a TARS-like dual-gait robot can actually be made to work. It also demonstrates the value of reinforcement learning for robot gaits.

A brief video below shows the bipedal walk in action. Not that long ago, walking robots were a real challenge, but with the tools available nowadays, even a robot running a 5k isn’t crazy.