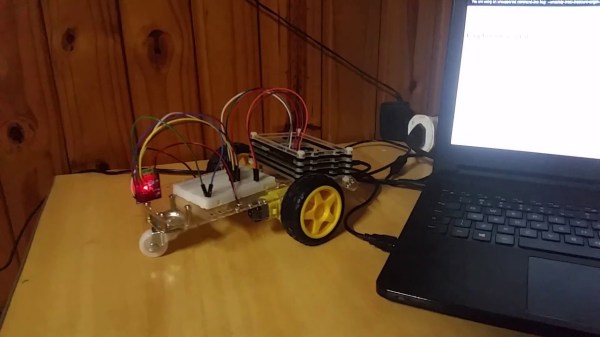

In the world of the Internet of Things, it’s easy enough to get something connected to the Internet. But what should you use to communicate with and control it? There are many standards and tools available, but often the best choice is the tool you already have on hand. [Victor] was in exactly this situation, and decided that the best way to control his Internet-connected car was the Flask server he already had.

The remote-controlled car was supposed to come with an Arduino, but the microcontroller was missing on arrival. He had a Raspberry Pi lying around and set it up in the Arduino’s place. He also took advantage of the Pi’s extra capabilities and wrote a Flask server to control the car, which you talk to as if it were a chat bot. Sending the words “go left/forward” to the Flask server, for example, steers the car accordingly.
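To give a feel for how a chat message could end up as motor commands, here is a minimal sketch in plain Python. It is not [Victor]'s code: the `parse_command` function, the `MOTOR_STATES` table, and the motor values are all hypothetical, and the sketch assumes the slash in “go left/forward” separates steps to run in order. On the real car, a Flask view would presumably call something like this and then drive the Pi's GPIO pins.

```python
# Hypothetical mapping from direction words to (left_motor, right_motor) states.
# The actual wiring and GPIO calls are not described in the write-up.
MOTOR_STATES = {
    "forward": (1, 1),
    "back": (-1, -1),
    "left": (0, 1),
    "right": (1, 0),
}


def parse_command(message):
    """Turn a chat message such as 'go left/forward' into a list of motor steps."""
    words = message.strip().lower().split(maxsplit=1)
    # Only messages of the form "go <directions>" are treated as driving commands.
    if len(words) != 2 or words[0] != "go":
        return []
    steps = []
    for direction in words[1].split("/"):
        direction = direction.strip()
        # Unknown direction words are skipped rather than raising an error.
        if direction in MOTOR_STATES:
            steps.append((direction, MOTOR_STATES[direction]))
    return steps
```

Returning an empty list for anything that is not a driving command lets the caller decide whether to reply with a help message or pass the text on to other chat bot handlers.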

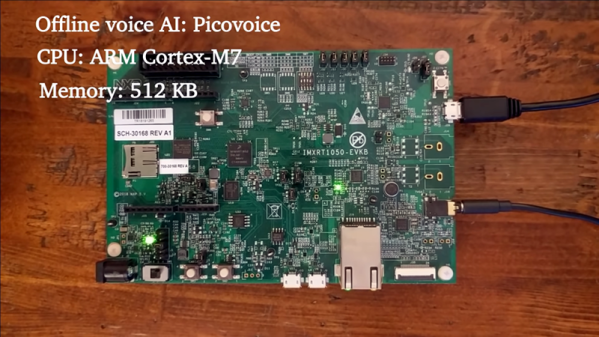

The chat bot itself contains some gems as well, and would be useful for any project that makes use of regular expressions. It also looks easy to extend. The project supports voice commands too, relying heavily on Mozilla’s voice recognition suite. If you want to get deep into the weeds of voice recognition on your own, though, you can also explore TensorFlow at your leisure.
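One common way to build a regex-driven chat bot that stays easy to extend is a table of patterns and handlers. The sketch below illustrates that general idea using only Python's standard `re` module. The `on` decorator, the `reply` function, and the patterns themselves are made up for illustration and are not taken from the project.

```python
import re

HANDLERS = []  # list of (compiled pattern, handler function) pairs


def on(pattern):
    """Register a handler for messages matching the given regular expression."""
    def register(func):
        HANDLERS.append((re.compile(pattern, re.IGNORECASE), func))
        return func
    return register


@on(r"^go (?P<direction>left|right|forward|back)$")
def drive(match):
    # A real bot would trigger the motors here; this sketch only reports the intent.
    return "driving " + match.group("direction").lower()


@on(r"^(stop|halt)$")
def stop(match):
    return "stopping"


def reply(message):
    """Return the response of the first handler whose pattern matches."""
    text = message.strip()
    for pattern, handler in HANDLERS:
        match = pattern.search(text)
        if match:
            return handler(match)
    return "sorry, I did not understand that"
```

Adding a new command is then a matter of writing one more decorated function, which is one plausible reading of what "easy to extend" means for a bot like this.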