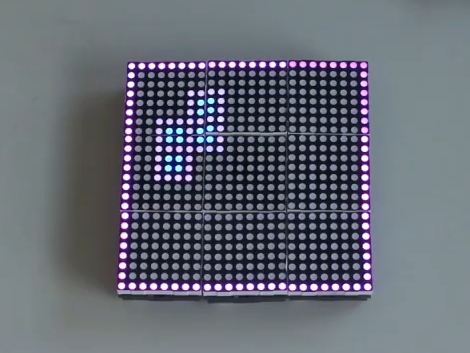

Meet MB Led, a set of interactive 8×8 LED tiles. Put them next to each other and they will orient themselves into a single video screen that is the sum of its parts. If this sounds familiar, it’s because we’ve seen the concept before in the GLiP project. [Guillaume] tells us that MB Led is the new version of GLiP, and from what we’ve seen they’ve made a lot of progress.

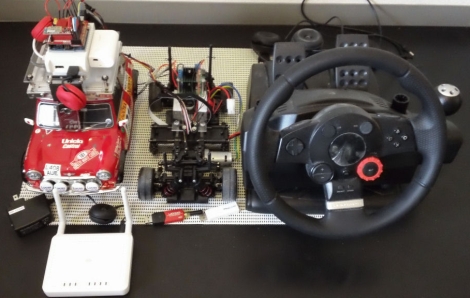

The hardware is well designed. A PCB hosts the STM32 microcontroller and a pair of pin headers which receive the RGB LED matrix module. A pair of AA battery holders makes up the legs for the device. Each tile has infrared receiver/emitter pairs on all four edges and constantly polls for its neighbors.

What really impresses us are the algorithms they’re using for communications. FreeRTOS runs on the ARM processors, and the team developed a series of messages that allow the blocks to elect a leader and follow its commands as a distributed system. Check out more about those algorithms on the page linked above, and join us after the break to see the demo video.
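To get a feel for how tiles that only talk to their immediate neighbors can still agree on one leader, here is a minimal sketch in Python. It is not the MB Led firmware or its message format. It assumes each tile has a unique integer ID and uses a generic "lowest ID wins" flooding scheme. The names `elect_leader` and `links`, and the IDs themselves, are made up for illustration.

```python
from typing import Dict, Set


def elect_leader(neighbors: Dict[int, Set[int]]) -> Dict[int, int]:
    """Return, for every tile, the leader ID it ends up believing in.

    `neighbors` maps a (hypothetical) tile ID to the IDs of the tiles it
    can reach over its edges. The rule here is an assumption: the lowest
    ID in a connected group becomes that group's leader.
    """
    # Every tile starts by nominating itself.
    best = {tile: tile for tile in neighbors}

    changed = True
    while changed:
        changed = False
        # One round: each tile hears what its neighbors currently believe.
        heard = {t: [best[n] for n in neighbors[t]] for t in neighbors}
        for tile, candidates in heard.items():
            lowest = min(candidates, default=best[tile])
            if lowest < best[tile]:
                # A better candidate arrived, so adopt it and keep going.
                best[tile] = lowest
                changed = True
    return best


# Four tiles arranged in a 2x2 square, plus one tile sitting on its own.
links = {
    7: {3, 12},
    3: {7, 9},
    12: {7, 9},
    9: {3, 12},
    5: set(),
}
leaders = elect_leader(links)
print(leaders)
```

In this example the four connected tiles all settle on tile 3, while the lone tile 5 stays its own leader until it is placed next to the others. A real implementation would also have to cope with lost infrared messages and tiles being moved mid-election, which this sketch ignores.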
