We were recently tipped off to the work of [Joshua Ellingson], and digging in, we found an extensive collection of art and ongoing experiments: synthesizers deforming and driving old black-and-white clips played on vintage television sets, objects jumping from screens into the real world and back, and cathode ray tube oscilloscopes drawing graphics in the air (loud sound!). We recommend checking out the short showcase videos embedded below before you continue reading, because words won't do these visuals justice.

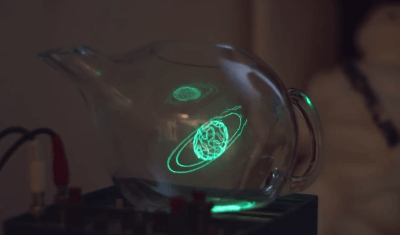

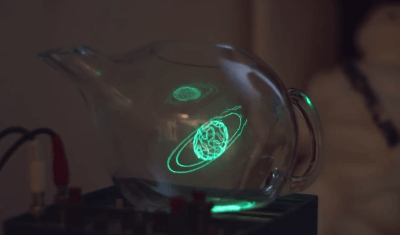

In case you're not up for a video, however, we'll try transcribing them anyway. Animals, shapes and figures appear in the real world, bound by glass spheres and containers, using the technique known as Pepper's Ghost. A variety of screens are used to create that illusion: sometimes it's a tablet, and sometimes it's an old television set resting upside down on top of a glass aquarium. Vintage television sets appear quite often in [Ellingson]'s experiments, typically playing movie scenes and clips from their own eras, or even serving as one of the locations a Pepper's Ghost-enchanted object can move into, firmly part of the same imaginary world turned real.

Things don't always move from a TV screen into their glass enclosure, gaining an extra dimension in the process, but when they do, the synchronization is impeccable. All of it is backed, and usually controlled, by Moog synthesizer sounds, with knob turns driving video distortions or aspects of an object's movement. Not all of his clips feature synthesizers, old TVs, or a Pepper's Ghost illusion, but every experiment of his contains at least two of the three, working in unison to create an impression. And as undeniable as the artistic value is, [Ellingson] also offers plenty of hacker value for us to take away!

[Ellingson] understands what goes into building optical illusions like Pepper's Ghost, using a variety of glassware, from Erlenmeyer flasks to teapots, and producing a steady stream of new ideas, each with its own spin. His aim is to share and create beyond what his art alone can achieve, which is why he encourages us to try it ourselves with this one-minute video of a quick Pepper's Ghost build, using nothing but a generic tablet, an emptied-out plastic snow globe, and a piece of cheap transparency film meant for school projectors. If you want to go further, he's made an extensive tutorial on illusions like the ones he builds, covering their simplicities and complexities and all the different ways you can build one.
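Why a flat tablet produces an upright, floating image comes down to plane-mirror geometry: the partially reflective film shows each pixel at a virtual point mirrored across the film's plane. Below is a minimal sketch in Python, assuming an idealized flat film tilted at 45 degrees (a snow globe build only approximates this). The coordinate setup is our own assumption: the tablet lies screen-up in the plane z = 0, the film's bottom edge runs along the x-axis, and the film leans toward a viewer standing at negative y (the plane y + z = 0). The function names are illustrative, not taken from [Ellingson]'s builds.

```python
import math


def reflect_point(p, plane_point, plane_normal):
    """Mirror point p across the plane through plane_point with normal plane_normal."""
    # Normalize the normal so the signed distance below is a true distance.
    length = math.sqrt(sum(c * c for c in plane_normal))
    n = [c / length for c in plane_normal]
    # Signed distance from p to the plane, measured along n.
    d = sum((pc - qc) * nc for pc, qc, nc in zip(p, plane_point, n))
    # Move p twice that distance through the plane to get its mirror image.
    return tuple(pc - 2 * d * nc for pc, nc in zip(p, n))


# Assumed geometry: film through the origin, tilted 45 degrees toward the viewer.
FILM_POINT = (0.0, 0.0, 0.0)
FILM_NORMAL = (0.0, 1.0, 1.0)


def virtual_image(pixel):
    """Where a pixel on the flat tablet (z = 0) appears to the viewer."""
    return reflect_point(pixel, FILM_POINT, FILM_NORMAL)


if __name__ == "__main__":
    # Pixels lying under the film at increasing distance from its bottom edge.
    for dist in (1.0, 3.0, 5.0):
        x, y, z = virtual_image((0.0, -dist, 0.0))
        print(f"pixel {dist} from edge -> y={round(y, 6) + 0.0}, height={round(z, 6)}")
```

Every pixel lands in the vertical plane y = 0, at a height equal to its distance from the film's bottom edge, which is why the flat screen reads as an upright image standing behind the glass.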

We all benefit when an artist finds a technology and starts playing with it, closing the divide between technology and art, and by extension, the divide between technology and nature. Sometimes it's flowing light art installations where you are a boulder in the path of plankton's movement; other times it's printed circuit birds packed with through-hole components that sing not unlike their non-printed-circuit counterparts, or CRT displays manipulated with function generator-driven coils that deflect the beam and turn the image into a pattern of lines.


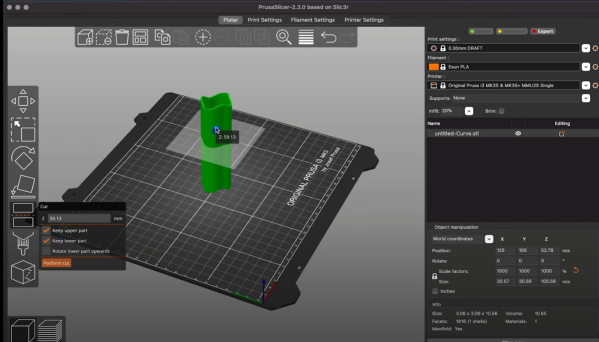

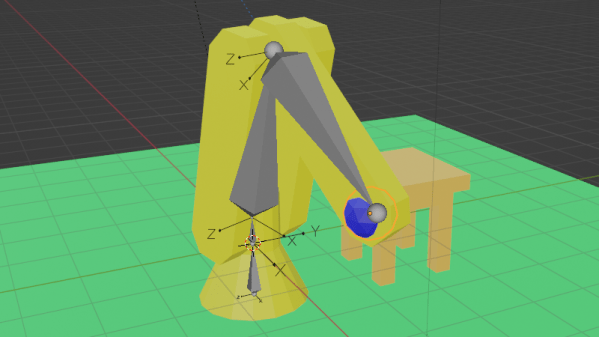

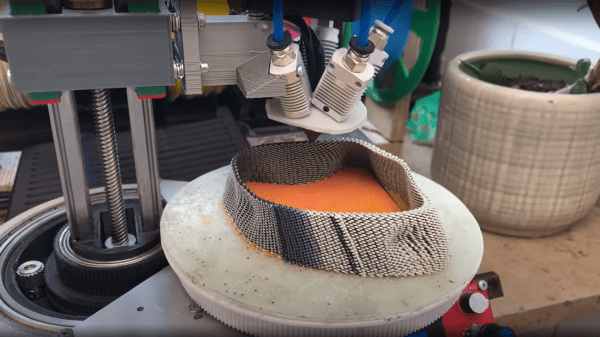

trick is to use the same weight maps and simply map colours to Blender text blocks, which are injected into the gcode at export time. These gcode blocks can be used to swap tool heads or extruders, enabling multiple filament colours or types to be blended in the same object.
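The injection step can be sketched in Python under loud assumptions: the colour-to-block mapping below is hypothetical, the slicer output is assumed to mark layers with Cura-style `;LAYER:n` comments, and colours switch per layer instead of being derived from weight maps as in the workflow described above. `T0` and `T1` are the standard tool-select commands in RepRap/Marlin-style firmware; a real setup would usually also need purge or wipe moves, which are left out here.

```python
# Hypothetical mapping from colour names to gcode text blocks.
# T0/T1 select extruder 0/1 in RepRap/Marlin-style firmware.
COLOUR_BLOCKS = {
    "red": "T0 ; switch to extruder 0 (red filament)",
    "blue": "T1 ; switch to extruder 1 (blue filament)",
}


def inject_blocks(gcode_lines, layer_colours, blocks=COLOUR_BLOCKS):
    """Insert a colour's gcode block after each layer marker where the colour changes.

    Assumes Cura-style ';LAYER:<n>' comments and a dict mapping layer index
    to colour name; layers missing from the dict keep the previous colour.
    """
    out = []
    current = None
    for line in gcode_lines:
        out.append(line)
        if line.startswith(";LAYER:"):
            layer = int(line.split(":", 1)[1])
            colour = layer_colours.get(layer, current)
            # Only emit a block when the colour actually changes.
            if colour is not None and colour != current:
                out.append(blocks[colour])
                current = colour
    return out


if __name__ == "__main__":
    sliced = [";LAYER:0", "G1 X0 Y0", ";LAYER:1", "G1 X1 Y1", ";LAYER:2", "G1 X2 Y2"]
    for line in inject_blocks(sliced, {0: "red", 2: "blue"}):
        print(line)
```

Emitting a block only on a colour change keeps redundant tool swaps out of the file, since each swap can cost time and filament on a real printer.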