The 2013 DARPA Robotics Challenge Trials have finished. The big winner is Team Schaft, pictured here preparing to drive in the vehicle trial. This isn’t the end of the line for DARPA’s robotics challenge, though: one more major event lies ahead. The DARPA Robotics Challenge Finals will be held at the end of 2014. The tasks will be similar to those we saw today. This time, however, communications between each team and its robot will be intentionally degraded to simulate real-world disaster conditions. The teams in today’s trials were competing for DARPA funding, and each of the top eight teams is eligible for up to $1 million USD from DARPA. Teams that didn’t make the cut are still welcome to compete in the finals using other sources of funding.

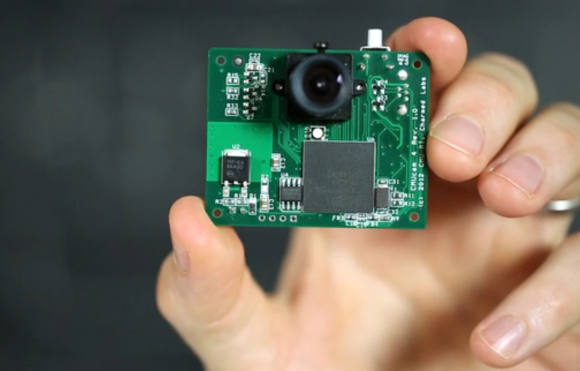

The trials were broken up into eight events: Door, Debris, Valve, Wall, Hose, Terrain, Ladder, and Vehicle. Each event was further divided into three parts, each worth one point. If a robot completed the entire task with no human intervention, it earned a bonus point. Including bonuses, 32 points were available (eight events at four points each). Team Schaft won with an incredible total of 27 points. In second place was Team IHMC (Institute for Human and Machine Cognition) with 20 points. Team IHMC deserves special praise because it was using a DARPA-provided Boston Dynamics Atlas robot. Teams using Atlas had only a few short weeks to go from working entirely in software simulation to controlling a real-world robot. In third place, with 18 points, was Carnegie Mellon University’s Team Tartan Rescue and its Chimp robot.

The expo portion of the challenge was also exciting, with first responders and robotics researchers working together to understand the problems robots will face in real-world disaster situations. Boston Dynamics, Google’s recent acquisition, was also on hand, running its WildCat and LS3 robots. The only real downside to the competition was DARPA’s coverage. The live stream left quite a bit to be desired. Most of the videos on DARPA’s YouTube channel are currently 9 to 10 hour raw recordings from some of the event cameras, and the wrap-up videos say very little about how the robots actually performed during the trials. Hopefully more information and video will come out in the coming days. For now, please share a timestamp and a description of your favorite moment in the comments.
