Most readers of Hackaday will be well aware of the current shortages of semiconductors and especially GPUs. Whether you’re planning to build a state-of-the-art gaming PC, putting together a mining rig to convert your kilowatt-hours into cryptocoins, or simply experimenting with machine-learning AI, you should be prepared to shell out quite a bit more money for a proper GPU than in the good old days.

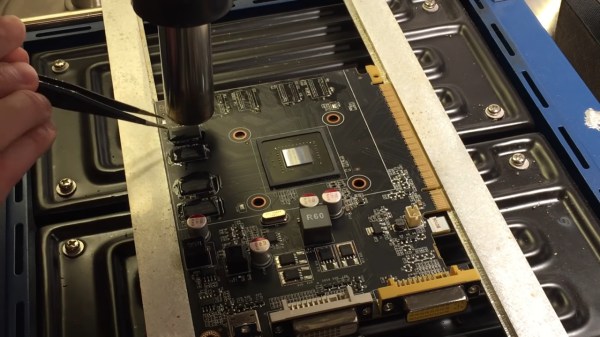

Bargains are still to be had in the second-hand market though. [Devon Bray] chanced upon a pair of Nvidia Tesla K80 cards, which are not suitable for gaming and no longer cost-effective for mining crypto, but ideal for [Devon]’s machine-learning calculations. However, he had to make a modification to enable proper thermal management, as these cards were not designed to be used in regular desktop PCs.

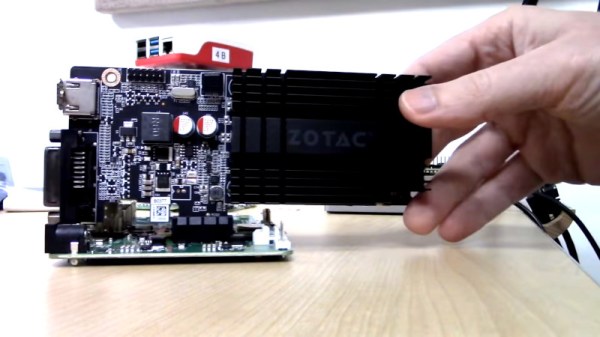

The reason for this is that many professional-grade GPU accelerators are installed in rack-mounted server cases, and are therefore equipped with heat sinks but no fans: the case is meant to provide a forced air flow to carry away the card’s heat. Simply installing the cards into a desktop PC case would cause them to overheat, as passive cooling will not get rid of the 300 W that each card pumps out on full load.

[Devon] decided to make a proper thermal solution by 3D printing a mount that carries three fans along with an air duct that snaps onto the GPU card. In order to prevent unnecessary fan noise, he added a thermal control system consisting of a Raspberry Pi Pico, a handful of MOSFETs, and a thermistor to sense the GPU’s temperature, so the fans are only driven when the card is getting hot. The Pi Pico is of course way more powerful than needed for such a simple task, but allowed [Devon] to program it in MicroPython, using more advanced programming techniques than would be possible on, say, an Arduino.
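To give a feel for what such a thermistor-based controller has to compute, here is a minimal sketch in plain Python. It is not [Devon]’s firmware: the divider wiring (thermistor on the low side), the 10 kΩ / Beta 3950 thermistor values, the 60 °C and 50 °C thresholds, and the function names `adc_to_celsius` and `next_fan_state` are all assumptions made for illustration. On an actual Pico the raw value would typically come from MicroPython’s `machine.ADC(...).read_u16()`.

```python
import math

# All constants are illustrative assumptions, not values from the actual build.
R_FIXED = 10_000.0     # fixed divider resistor between 3.3 V and the ADC pin, ohms
R_NOMINAL = 10_000.0   # assumed NTC thermistor resistance at 25 degrees C, ohms
BETA = 3950.0          # assumed Beta coefficient of the thermistor
T_NOMINAL_K = 298.15   # 25 degrees C in kelvin
ADC_MAX = 65535        # full scale of a 16-bit ADC reading

FAN_ON_C = 60.0        # hypothetical threshold: switch fans on at or above this
FAN_OFF_C = 50.0       # hypothetical threshold: switch fans off at or below this


def adc_to_celsius(raw):
    """Convert a 16-bit ADC reading to degrees C using the Beta model.

    Assumes the thermistor sits between the ADC pin and ground.
    """
    raw = min(max(raw, 1), ADC_MAX - 1)  # keep away from the rails
    r_therm = R_FIXED * raw / (ADC_MAX - raw)
    inv_t = 1.0 / T_NOMINAL_K + math.log(r_therm / R_NOMINAL) / BETA
    return 1.0 / inv_t - 273.15


def next_fan_state(temp_c, fan_on):
    """Hysteresis: keep the current state while between the two thresholds."""
    if temp_c >= FAN_ON_C:
        return True
    if temp_c <= FAN_OFF_C:
        return False
    return fan_on
```

The gap between the two thresholds keeps the fans from rapidly toggling when the temperature hovers around a single switching point. A real controller would call these in a loop and drive the MOSFET gate pin with the result.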

We love the elegant design of the fan duct, which enables two of these huge cards to fit onto a motherboard side-by-side. We’ve seen people working on the opposite problem of fitting large fans into small cases, as well as designs that discard the whole idea of using fans for cooling.
