The Raspberry Pi platform grows more capable and powerful with each iteration. With that said, they’re still not the go-to for high powered computing, and their external interfaces are limited for reasons of cost and scope. Despite this, people like [Jeff Geerling] strive to push the platform to its limits on a regular basis. Unfortunately, [Jeff’s] recent experiments with GPUs hit a hard stop that he’s as yet unable to overcome.

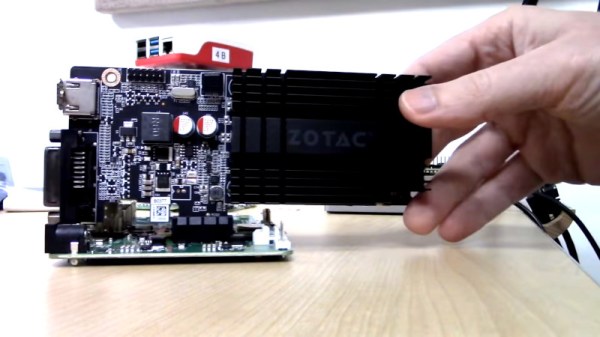

With the release of the new Compute Module 4, the Raspberry Pi ecosystem now has a device that has a PCI-Express 2.0 1x interface as stock. This lead to many questioning whether or not GPUs could be used with the hardware. [Jeff] was determined to find out, buying a pair of older ATI and NVIDIA GPUs to play with.

Immediate results were underwhelming, with no output whatsoever after plugging the modules in. Of course, [Jeff] didn’t expect things to be plug and play, so dug into the kernel messages to find out where the problems lay. The first problem was the Pi’s limited Base Address Space; GPUs need a significant chunk of memory allocated in the BAR to work. With the CM4’s BAR expanded from 64MB to 1GB, the cards appeared to be properly recognised and ARM drivers were able to be installed.

Alas, the story ends for now without success. Both NVIDIA and ATI drivers failed to properly initialise the cards. The latter driver throws an error due to the Raspberry Pi failing to account for the I/O BAR space, a legacy x86 feature, however others suggest the problem may lay elsewhere. While [Jeff] may not have pulled off the feat yet, he got close, and we suspect with a little more work the community will find a solution. Given ARM drivers exist for these GPUs, we’re sure it’s just a matter of time.

For more of a breakdown on the Compute Module 4, check out our comprehensive article. Video after the break.

Continue reading “Trying (And Failing) To Use GPUs With The Compute Module 4” →