There was an all-too-brief period in history when typewriters went from clunky, purely mechanical beasts to streamlined, portable electromechanical devices. But when the 80s came around and the PC revolution started, the typewriting was on the wall for these machines, and by the 90s everyone had a PC, a printer, and Microsoft Word. And thus the little daisy-wheel typewriters began to populate thrift shops all over the world.

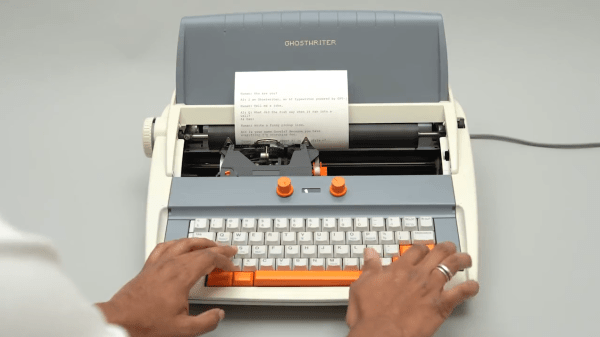

That’s fine with us, because it gave [Arvind Sanjeev] a chance to build “Ghostwriter”, an AI-powered automatic typewriter. The donor machine was a clapped-out Brother electronic typewriter, which needed a bit of TLC to bring it back to working condition. From there, [Arvind] worked out the keyboard matrix and programmed an Arduino to both read keystrokes from the typewriter and type on it. A Raspberry Pi running the OpenAI Python API for GPT-3 talks to the Arduino over serial, so you can enter a GPT writing prompt with the keyboard and have the machine spit out a dead-tree version of the results.
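For a feel of what the Pi side of that serial link has to do, here is a minimal sketch, not [Arvind]'s actual code. It assumes prompts arrive from the Arduino as newline-terminated ASCII and that the reply should be wrapped to the carriage width before being sent back. The names `handle_prompt` and `LINE_WIDTH`, the 60-column width, and the `complete` callback standing in for the GPT-3 request are all hypothetical.

```python
import textwrap
from typing import Callable

# Assumed number of printable columns on the page; not taken from the build.
LINE_WIDTH = 60


def handle_prompt(raw: bytes, complete: Callable[[str], str]) -> bytes:
    """Turn one prompt line from the Arduino into bytes for the typewriter.

    `complete` is a stand-in for whatever function sends the prompt to
    the language model and returns its text.
    """
    # Drop anything the typewriter could not have typed, plus the newline.
    prompt = raw.decode("ascii", errors="ignore").strip()
    if not prompt:
        return b""

    reply = complete(prompt)

    # Wrap to the carriage width so the print head never runs off the page.
    lines = textwrap.wrap(reply, width=LINE_WIDTH)

    # Carriage return plus line feed after every line, including the last.
    typed = "\r\n".join(lines) + "\r\n"
    return typed.encode("ascii", errors="replace")
```

In a real loop, `raw` would come from something like pyserial's `Serial.readline()` and the returned bytes would go back out through `Serial.write()`. Keeping the model call behind a callback means the framing and wrapping can be tried on a desk without the typewriter or an API key.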

To mix things up a bit, [Arvind] added a pair of pots to control the creativity and length of the response, plus an OLED screen that seems to do nothing but show some cute animations, which we don’t hate. We also don’t hate the new paint job the typewriter got, but the jury is still out on the “poetry” that it typed up. Eye of the beholder, we suppose.
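How might two knobs turn into "creativity" and "length"? One plausible route, and this is our guess rather than anything confirmed by the build, is to scale the raw pot readings into the `temperature` and `max_tokens` parameters that GPT-3 completion requests accept. The sketch below assumes 10-bit readings (0 to 1023) such as an Arduino's `analogRead()` typically returns; the function name `pot_to_params` and the range limits are hypothetical.

```python
# Assumed full-scale pot reading (10-bit ADC); not taken from the build.
ADC_MAX = 1023


def pot_to_params(creativity_raw: int, length_raw: int,
                  max_temperature: float = 1.0,
                  min_tokens: int = 16, max_tokens: int = 256) -> dict:
    """Scale two raw pot readings into sampling parameters."""
    # Clamp noisy or out-of-range readings, then normalise to 0.0..1.0.
    creativity = min(max(creativity_raw, 0), ADC_MAX) / ADC_MAX
    length = min(max(length_raw, 0), ADC_MAX) / ADC_MAX

    return {
        # Higher temperature means more adventurous word choices.
        "temperature": round(creativity * max_temperature, 2),
        # Linear sweep between the shortest and longest allowed reply.
        "max_tokens": min_tokens + round(length * (max_tokens - min_tokens)),
    }
```

Turning both knobs fully down would give the most predictable, shortest reply the chosen limits allow, and turning both fully up would give the loosest and longest.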

Whatever you think of GPT’s capabilities, this is still a neat build and a nice reuse of otherwise dead-end electronics. Need a bit more help building natural language AI into your next project? Our own [Donald Papp] will get you up to speed on that.
