Oftentimes, the feature set for our typical fitness-focused wearables feels a bit empty. Push notifications on your wrist? OK, fine. Counting your steps? Sure, why not. But how useful are those capabilities anyway? Well, what if wearables could be used for a more dignified purpose like helping people in recovery from substance use disorder (SUD)? That’s what the researchers at the University of Massachusetts Medical School aimed to find out.

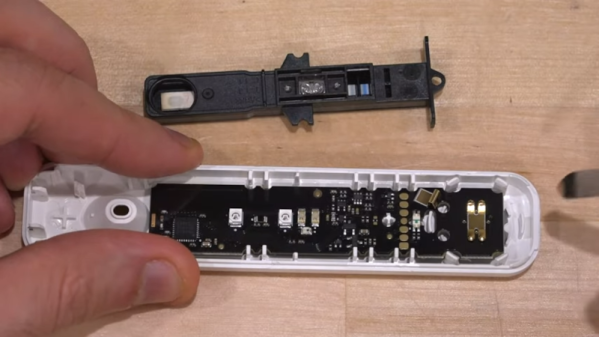

In their paper, they used a wrist-worn wearable to measure locomotion, heart rate, skin temperature, and electrodermal activity of 38 SUD patients during their everyday lives. They wanted to detect periods of stress and craving, as both are possible triggers of substance use. They also had patients self-report the times during the day when they felt stressed or had cravings, and used those reports to calibrate their model.
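To make the self-report idea a bit more concrete, here is a rough sketch of how sensor readings might be paired with reported events. The details are our assumptions, not the paper's: the 60-second window, the heart-rate-only feature, and the `Window` and `label_windows` names are all hypothetical, and the sample numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Window:
    start: float     # window start, in seconds since recording began
    mean_hr: float   # mean heart rate over the window
    stressed: bool   # True if a self-report falls inside this window


def label_windows(samples, reports, width=60.0):
    """Group (time, heart_rate) samples into fixed windows and label them.

    samples: list of (timestamp_seconds, heart_rate) tuples
    reports: timestamps at which the wearer reported feeling stressed
    width:   window length in seconds (an arbitrary choice for this sketch)
    """
    buckets = {}
    for t, hr in samples:
        buckets.setdefault(int(t // width), []).append(hr)

    # Indices of the windows that contain at least one self-report.
    reported = {int(t // width) for t in reports}

    windows = []
    for index in sorted(buckets):
        values = buckets[index]
        windows.append(Window(
            start=index * width,
            mean_hr=sum(values) / len(values),
            stressed=index in reported,
        ))
    return windows


# Made-up readings: (seconds, beats per minute).
samples = [(0, 70), (30, 72), (60, 95), (90, 99), (120, 74)]
reports = [75]  # one self-reported stress event at t = 75 s
windows = label_windows(samples, reports)
for w in windows:
    print(w)
```

Each labeled window then becomes one training example for a classifier. A real pipeline would presumably compute features from all four sensor channels rather than a single heart-rate average.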

They tried a number of classification models, including decision trees, discriminant analysis, and logistic regression, but had the most success with support vector machines, though they did not discuss why that might be the case. In the end, they found that they could detect stress vs. non-stress with an accuracy of 81.3% and craving vs. no-craving with an accuracy of 82.1%. Not amazing accuracy, but given the dire need for medical advancements for SUD, it’s something to keep an eye on. Interestingly enough, they found that locomotion data alone yielded an accuracy of approximately 75% when it came to indicating stress and cravings.
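For anyone curious what that kind of model bake-off looks like in practice, here is a minimal sketch using scikit-learn. It is not the researchers' code: the data is synthetic (generated by `make_classification`), and the hyperparameters, the 5-fold cross-validation, and the use of the linear variant of discriminant analysis are our own assumptions. The ranking it prints says nothing about the study's results.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for windowed sensor features; NOT the study's data.
X, y = make_classification(
    n_samples=400, n_features=8, n_informative=4, random_state=0
)

# The model families named in the article, with assumed default settings.
models = {
    "decision tree": DecisionTreeClassifier(random_state=0),
    "discriminant analysis": LinearDiscriminantAnalysis(),
    "logistic regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
    "support vector machine": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
}

# Mean 5-fold cross-validated accuracy for each model.
scores = {}
for name, model in models.items():
    scores[name] = cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()

for name, acc in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {acc:.3f}")
```

Scaling the features before the SVM and logistic regression is a common precaution, since both are sensitive to features measured on very different scales, which is likely when mixing heart rate with skin temperature.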

Much ado has been made about the insufficient accuracy of wearable devices for medical diagnoses, particularly those that measure activity and heart rate. Maybe their model would perform better if it were trained on real-time measurements of cortisol, a more accurate physiological measure of stress.

Finally, what really stood out to us about this study was how willing patients were to use a wearable in their treatment strategy. It’s sad that society oftentimes has a very negative perception of SUD patients, which leads to fewer treatment options for them. But hopefully, with technological advancements such as this, we’re one step closer to a more equitable future of healthcare.