We were trading stories of our first self-made PCBs in the secret underground Hackaday bunker, and a couple of the boards looked really good for first efforts. Of course there were mistakes and sub-optimal routing, but who among us never connects up the wrong signals or uses a bad footprint? What led me to have a hacker “kids these days have it so easy” moment was that all of the boards were, of course, professionally fabbed with nice silkscreens. They all looked great.

What a glorious time to be starting down the hardware path! When I made my first PCB, the options were basically laying down tape, pulling out the etch resist pen, or paying a bazillion inflation-adjusted dollars for a rapid prototype board. This meant that the aspiring hacker also had to have a steady hand and be at least casually acquainted with a little chemistry. The ability to just send your files out to a PCB house means that the barrier to stepping up your hardware game from plug-them-together modules is lower than it’s ever been.

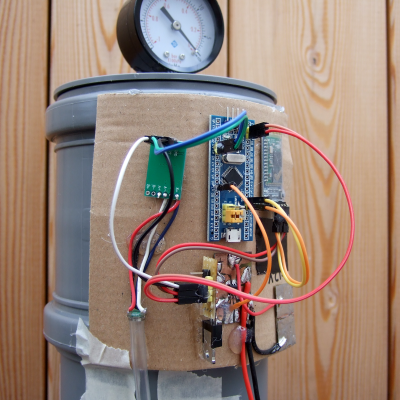

But if scratching or etching your own PCB out of copper plate is very hands-on, very DIY, and very low-tech, it’s also very fast in comparison to even the most rushed service. Last weekend, I needed a breakout board for some eight-pin SOIC H-bridge chips for a turtle robot project with my son. Everything was hand-soldered and hot-glued in a Saturday afternoon and evening, so there was no time for a PCB order. A perfect opportunity for the Old Ways™.

We broke out a Sharpie, traced out where the SOIC pins would land, connected up the grounds, brought the signals out to friendly pads, and then covered the rest of the board in islands of copper just in case we’d need any prototyping space later. Of course, some of the ink lines touched each other where they shouldn’t, but before the copper meets the etchant it’s easy enough to scrape the spaces clear with a pin. The results? My boards look like they were chiseled out by a caveman, but they worked. And more importantly, we got it done within the attention span of a second grader without firing up a computer.

So revel in your cheap offshore PCB factories, hackers of today! It’s a miracle that even four-layer boards come back within a week without breaking the bank. But I encourage you all to try making a board by hand as well. For large enough packages and one-offs, full DIY absolutely has the speed advantage, but there’s also a certain wabi-sabi to the hand-drawn board. Like brush strokes in residual copper.