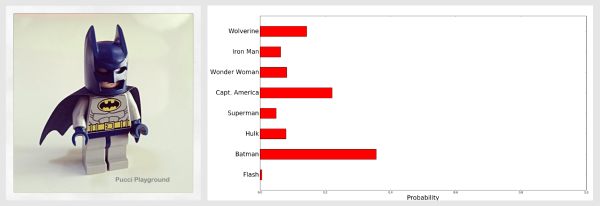

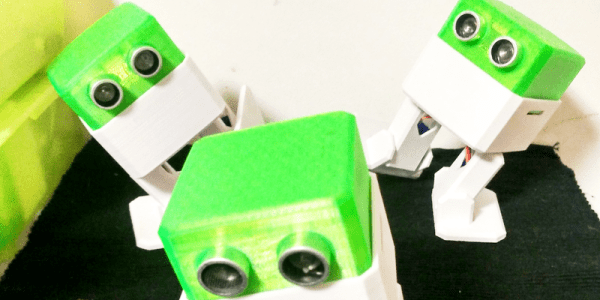

[Massimiliano Patacchiola] writes this handy guide on using a histogram intersection algorithm to identify different objects. In this case, lego superheroes. All you need to follow along are eyes, Python, a computer, and a bit of machine learning magic.

He gives a good introduction to the idea. You take a histogram of the colors in a properly cropped and filtered photo of the object you want to identify. You then feed that into a neural network and train it to identify the different superheroes by color. When you feed it a new image later, it will compare the new image’s histogram to its model and output confidences as to which set it belongs.

This is a useful thing to know. While a lot of vision algorithms try to make geometric assertions about the things they see, adding color to the mix can certainly help your friendly robot project recognize friend from foe.