When you think of tracked robots, you might think of bomb disposal robots or others used in military applications. You probably haven’t seen anything quite like this, however. It’s a “reconfigurable continuous track robot” from researchers [Tal Kislasi] and [David Zarrouk] (via IEEE Spectrum).

The robot looks simple, like some kind of tracked worm. As its motors turn, the track circulates as you would expect, propelling the robot along the ground. Its special feature, though, is that the track can bend itself up and down, just like a snake might as it rises up to survey a given area.

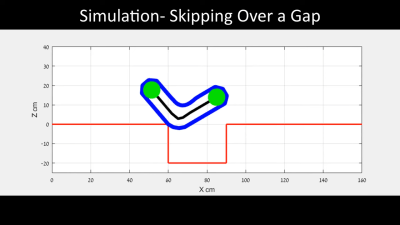

The little tracked robot can thus tilt itself up to climb steps, and bend itself over small obstacles. It can even hold itself up high as it inches along, in an attempt to bridge its way over a gap.

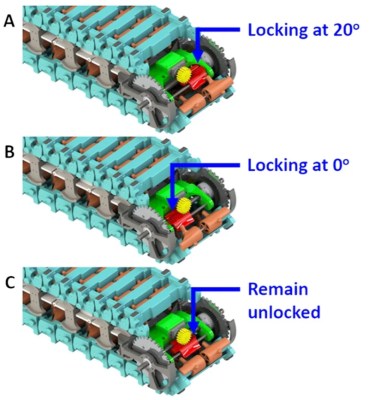

How does it achieve this? Well, the robot is able to selectively lock the individual links of its outer track in various orientations. As the links pass over the front of the robot, a small actuator either locks each link at a 20-degree angle, locks it straight, or leaves it loose.

The ability to lock multiple links into a continuous rigid structure allows the robot to rise up from the ground, form itself into a stiff beam, or conform to the ground as desired. A mechanism at the back of the robot unlatches the links as they pass by so the robot retains flexibility as it moves along.
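To get a feel for how a run of locked links turns into a shape, here is a rough Python sketch. It is not the researchers' code: the names `LinkState` and `locked_shape`, the unit link length, and the idea that each bent link adds 20 degrees relative to the previous one are all assumptions made for illustration. Only the three link states and the 20-degree figure come from the description above.

```python
import math
from enum import Enum


class LinkState(Enum):
    """Hypothetical names for the three link states described above."""
    LOOSE = "loose"        # free to pivot, so it conforms to the ground
    STRAIGHT = "straight"  # locked in line with the previous link
    BENT = "bent"          # locked at 20 degrees to the previous link (assumed relative)


BEND_DEG = 20.0  # lock angle quoted in the article


def locked_shape(states, link_length=1.0):
    """Return the (x, y) joint positions for a run of locked links.

    The run starts at the origin heading along +x. It stops at the first
    LOOSE link, since a loose link cannot carry the rigid shape any further.
    link_length is an arbitrary unit, not a measured value.
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for state in states:
        if state is LinkState.LOOSE:
            break
        if state is LinkState.BENT:
            heading += math.radians(BEND_DEG)
        x += link_length * math.cos(heading)
        y += link_length * math.sin(heading)
        points.append((x, y))
    return points


if __name__ == "__main__":
    # Three bent links curl the tip upward; three straight links form a beam.
    print(locked_shape([LinkState.BENT] * 3))
    print(locked_shape([LinkState.STRAIGHT] * 3))
```

Under these assumptions, locking three links in a row at the bent setting leaves the tip of the track heading 60 degrees above the ground, while a run of straight locks gives the stiff beam used for gap crossing.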

It’s a nifty design, and one we’d like to see implemented on a more advanced tracked robot. We’ve explained the benefits of tracked drivetrains before, too.
