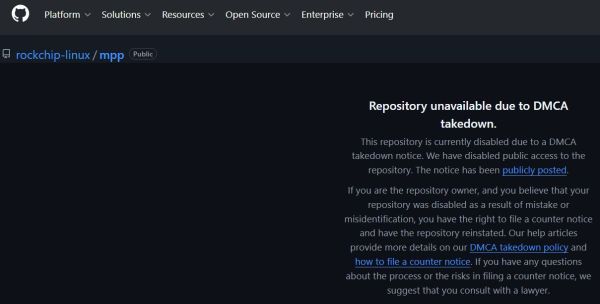

Recently GitHub disabled the Rockchip Linux MPP repository following a DMCA takedown request from the FFmpeg team. As of writing, the affected repository remains unavailable. At the core of this issue is the Rockchip MPP framework, which provides hardware-accelerated video operations on Rockchip SoCs. Much of its code was lifted verbatim from FFmpeg, and the allegation is that this was done with the original copyright notices and author credits removed. On top of that, the Rockchip MPP framework was relicensed from LGPL 2.1 to the Apache license.

Perhaps most egregious of all, the FFmpeg team privately contacted Rockchip about this nearly two years ago, and evidently no action has been taken since. FFmpeg therefore demands that Rockchip either undo the actions that violate the LGPL or remove all infringing files.

This news and further context are also covered by [Brodie Robertson] in a video. Interestingly, Rockchip is clearly aware of this licensing issue, judging by its public communications and GitHub issues, but seems to defer dealing with it until some undefined point in the future. Clearly that was the wrong choice, though what happens next remains a major open question. [Brodie] speculates that Rockchip will keep ignoring the issue, but is hopeful that he'll be proven wrong.

Unfortunately, these sorts of long-standing license violations aren't uncommon in the open source world.
