The modern era of VR has been underway for over a decade now, and a wide range of commercial headsets have hit the market since then. If you don’t want to buy off the shelf, though, you could always follow [Manolo]’s example and build your own.

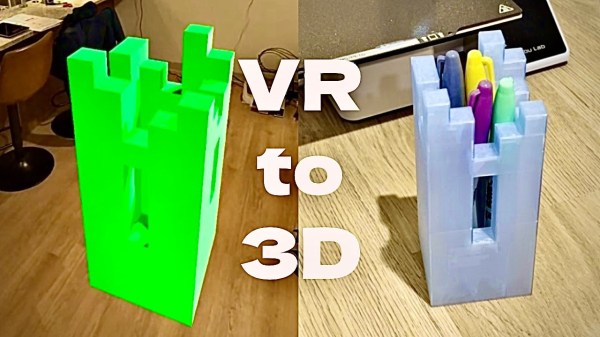

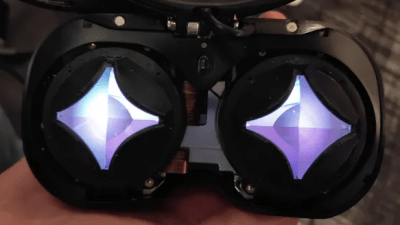

This DIY headset, known as the Persephone 3 Lite, is intended for use with SteamVR. It’s got the requisite motion tracking thanks to a Raspberry Pi Pico paired with an MPU6500 inertial measurement unit (IMU). As for the optics, the headset relies on a pair of 2.9-inch square displays, each running at 1440 x 1440 with a refresh rate of up to 90 Hz. They’re paired with cheap Fresnel lenses that cost just a few dollars on AliExpress. Everything is wrapped up in a custom 3D-printed housing that holds the screens and lenses in the right positions so that your eyes can focus on both displays at once. The head strap, sourced from a Quest 2, is perhaps the only part borrowed from a commercial headset.
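To give a feel for what a microcontroller does with raw IMU data, here’s a minimal Python sketch of a complementary filter that turns MPU6500 accelerometer and gyroscope readings into head orientation. This is not [Manolo]’s firmware; the filter choice, the 0.98 blend factor, and the names `to_physical` and `ComplementaryFilter` are illustrative assumptions. The scale factors correspond to the MPU6500’s default ±2 g and ±250 °/s ranges.

```python
import math

# Sensitivities for the MPU6500's default full-scale ranges:
# accelerometer at +/-2 g -> 16384 LSB per g, gyroscope at +/-250 dps -> 131 LSB per deg/s.
ACCEL_LSB_PER_G = 16384.0
GYRO_LSB_PER_DPS = 131.0


def to_physical(raw_accel, raw_gyro):
    """Convert raw signed 16-bit readings (x, y, z) to g and degrees per second."""
    accel = tuple(a / ACCEL_LSB_PER_G for a in raw_accel)
    gyro = tuple(g / GYRO_LSB_PER_DPS for g in raw_gyro)
    return accel, gyro


class ComplementaryFilter:
    """Hypothetical orientation estimator: roll, pitch, and yaw in degrees.

    Roll and pitch blend integrated gyro rates with the tilt implied by gravity.
    Yaw comes from the gyro alone, since a 6-axis IMU like the MPU6500 has no
    magnetometer to correct heading drift.
    """

    def __init__(self, alpha=0.98):
        self.alpha = alpha  # weight on the integrated gyro estimate (assumed value)
        self.roll = 0.0
        self.pitch = 0.0
        self.yaw = 0.0

    def update(self, accel, gyro, dt):
        ax, ay, az = accel
        gx, gy, gz = gyro
        # Tilt angles implied by the direction of gravity in the sensor frame.
        accel_roll = math.degrees(math.atan2(ay, az))
        accel_pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
        # Trust the gyro over short intervals and the accelerometer over long ones.
        self.roll = self.alpha * (self.roll + gx * dt) + (1 - self.alpha) * accel_roll
        self.pitch = self.alpha * (self.pitch + gy * dt) + (1 - self.alpha) * accel_pitch
        # Heading is a plain integral of the z-axis rate, so it drifts over time.
        self.yaw += gz * dt
        return self.roll, self.pitch, self.yaw
```

A level, stationary reading such as `to_physical((0, 0, 16384), (0, 0, 0))` yields zero roll and pitch. The blend factor trades gyro responsiveness against accelerometer noise, while yaw has no absolute reference and will slowly wander.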

If you’re eager to recreate this build at home, files are available to subscribers over on [Manolo]’s Patreon page. We’ve featured some other DIY headset builds before, too. Video after the break.