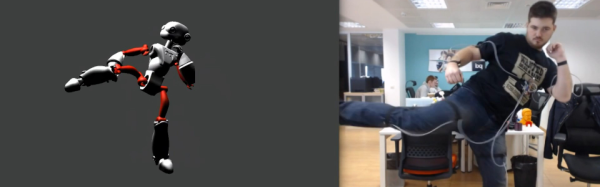

The web is abuzz with the news that the Facebook-owned Oculus Rift has buried in its terms of service a clause allowing the social media giant access to the “physical movements and dimensions” of its users. This is likely to be used for directing advertising to those users and, most importantly for the advertisers, for measuring the degree of interaction between user and advert. It’s a dream come true for the advertising business: instead of relying on eye-tracking or other engagement studies on limited subsets of users, they can take these metrics from their entire user base and hone their offering on an even more targeted basis, aiming for peak interaction to maximize their revenue.

Hardly a surprise, you might say, given that Facebook is no stranger to criticism on privacy matters. It does however represent a hitherto unseen level of intrusion into a user’s personal space, to the point of being able to guess the nature of their activities from their movements, and this opens up fresh potential for nefarious uses of the data.

Fortunately for us there is a choice, even if our community doesn’t circumvent the data-slurping powers of their headsets: a rash of other virtual reality products are in the offing from Samsung, HTC, and Sony among others, and of course there is Google’s budget offering. Sadly though, it is likely that privacy concerns will not touch the non-tech-savvy end-user, so competition alone will not stop the relentless desire of big business to get this close to you. Instead vigilance is the key: to spot such attempts when they make their way into the small print, and to shine a light on them even when the organisations in question would prefer that they remained incognito.

Oculus Rift development kit 2 image: By Ats Kurvet – Own work, CC BY-SA 4.0, via Wikimedia Commons.