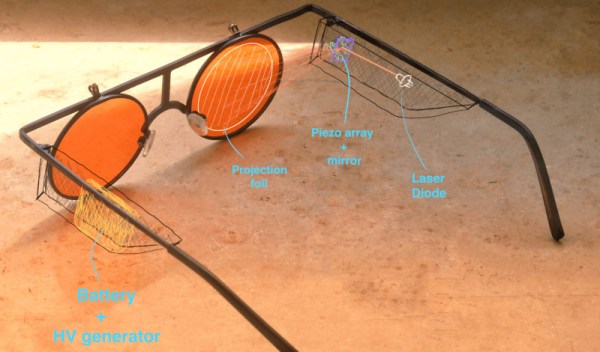

If you’re into pushing tech boundaries from home, this one’s for you. Redditor [mi_kotalik] has crafted ‘Zero’, a custom pair of DIY augmented reality (AR) glasses built around a Raspberry Pi Zero. Designed as an affordable, self-contained device for simple AR functions, Zero lets him experiment without breaking the bank. With video playback, Bluetooth audio, a teleprompter, and an image viewer, Zero shows what determination and creativity can achieve on a budget. The original Reddit thread includes videos, a build log, and links to documentation on X, giving you an in-depth look at [mi_kotalik]’s journey. Take a sneak peek through the lens here.

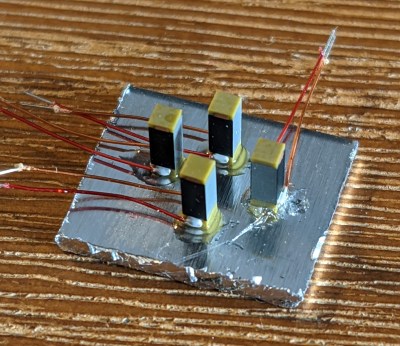

![[mi_kotalik] wearing DIY AR goggles](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-smallphoto.jpg?w=400)

Creating Zero wasn’t simple. From designing the frame in Tinkercad to experimenting with transparent PETG for printed lenses (and ultimately switching to resin-cast ones), [mi_kotalik] faced plenty of challenges. By customizing the SPI displays and tuning them to run at 60 FPS, he made them responsive enough to experiment with real-time AR interactions. While the Raspberry Pi Zero’s power is limited, [mi_kotalik] is already planning a V2 with a Compute Module 4 to enable 3D rendering, GPS, and spatial tracking.
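
To get a feel for why 60 FPS over SPI takes real tuning, here is a rough back-of-the-envelope sketch. The write-up does not state the panel resolution or color depth, so the 240x240 RGB565 figures below are purely hypothetical, as are the function names. The sketch also assumes plain single-lane SPI (one bit per clock) and ignores command overhead.

```python
def required_spi_clock_hz(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Minimum SPI clock (Hz) needed to push full frames at the given rate.

    Assumes single-lane SPI (one bit per clock cycle) and no command overhead.
    """
    return width * height * bits_per_pixel * fps


def max_fps(width: int, height: int, bits_per_pixel: int, spi_clock_hz: float) -> float:
    """Upper bound on the full-frame refresh rate for a given SPI clock."""
    return spi_clock_hz / (width * height * bits_per_pixel)


if __name__ == "__main__":
    # Hypothetical panel: 240x240 pixels at 16 bits per pixel (RGB565).
    # These numbers are NOT taken from the Zero build.
    clock = required_spi_clock_hz(240, 240, 16, 60)
    print(f"60 FPS needs at least {clock / 1e6:.1f} MHz of SPI clock")
    print(f"At 32 MHz the ceiling is {max_fps(240, 240, 16, 32e6):.1f} FPS")
```

Under those assumptions, full-frame updates at 60 FPS already call for a clock north of 55 MHz on a single display, which hints at why tricks such as partial redraws or reduced color depth are common on SPI panels. Whether Zero uses any of them is not stated in the write-up.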

Zero is an inspiring example for tinkerers hoping to make AR tech more accessible, especially after the recent news of both Meta and Apple cancelling their attempts to venture into the world of AR. If you are into AR and eager to learn from an original project like this one, check out the full Reddit thread and explore Hackaday’s past coverage of augmented reality experiments.

Continue reading “Pi Zero To AR: Building DIY Augmented Reality Glasses”

![[mi_kotalik] wearing DIY AR goggles in futuristic setting](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-1200.jpg?w=600&h=450)