The HTC Vive is a virtual reality system designed to work with SteamVR. The system goes beyond just a headset, turning an entire room into a virtual reality environment by using two base stations that track the headset and controllers in space. The hardware is exciting for its potential to expand gaming and other VR experiences, but it’s already showing significant potential for hackers as well, in this case with robot localization and navigation.

Autonomous robots generally use one of two basic approaches to locate themselves: onboard sensors and mapping to see the world around them (like how you’d get your bearings while hiking), or sensors in the room which tell the robot where it is (similar to your GPS telling you where you are in the city). Each method has its strengths and weaknesses, of course. Onboard sensors are traditionally expensive if you need very accurate position data, and GPS location data is far too inaccurate to be of use at scales smaller than city streets.

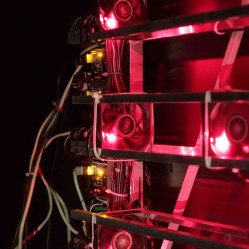

[Limor] immediately saw the potential in the HTC Vive to solve this problem, at least for indoor applications. Using the Vive Lighthouse base stations, he’s able to locate the system’s controller in 3D space to within 0.3 mm. He’s then able to use this data on a Linux system and integrate it into ROS (Robot Operating System). [Limor] hasn’t yet built a robot to utilize this approach, but the significant cost savings ($800 for a complete Vive, of which only the Lighthouses and controller are needed) are sure to make this a desirable option for a lot of robot builders. And, as we’ve seen, integrating the Vive hardware with DIY electronics should be entirely possible.
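To give a feel for what feeding a tracked pose into ROS might involve, here is a minimal sketch. It is not [Limor]’s code. It assumes the tracking software hands you the controller pose as a 3x4 matrix (rotation plus translation), which is how OpenVR-style APIs typically report poses, and it converts that into the position and x, y, z, w quaternion that a ROS pose message expects. The function name is made up for illustration.

```python
import math


def pose_matrix_to_position_quaternion(m):
    """Convert a 3x4 pose matrix [R | t] (list of 3 rows of 4 floats)
    into ((x, y, z), (qx, qy, qz, qw)).

    Hypothetical helper: assumes R is a proper rotation matrix.
    """
    position = (m[0][3], m[1][3], m[2][3])

    r00, r01, r02 = m[0][0], m[0][1], m[0][2]
    r10, r11, r12 = m[1][0], m[1][1], m[1][2]
    r20, r21, r22 = m[2][0], m[2][1], m[2][2]

    trace = r00 + r11 + r22
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)  # s = 4 * qw
        qw = 0.25 * s
        qx = (r21 - r12) / s
        qy = (r02 - r20) / s
        qz = (r10 - r01) / s
    elif r00 > r11 and r00 > r22:
        s = 2.0 * math.sqrt(1.0 + r00 - r11 - r22)  # s = 4 * qx
        qw = (r21 - r12) / s
        qx = 0.25 * s
        qy = (r01 + r10) / s
        qz = (r02 + r20) / s
    elif r11 > r22:
        s = 2.0 * math.sqrt(1.0 + r11 - r00 - r22)  # s = 4 * qy
        qw = (r02 - r20) / s
        qx = (r01 + r10) / s
        qy = 0.25 * s
        qz = (r12 + r21) / s
    else:
        s = 2.0 * math.sqrt(1.0 + r22 - r00 - r11)  # s = 4 * qz
        qw = (r10 - r01) / s
        qx = (r02 + r20) / s
        qy = (r12 + r21) / s
        qz = 0.25 * s

    return position, (qx, qy, qz, qw)


# Example: controller at (1, 2, 3), rotated 90 degrees about the z axis.
example_pose = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0,  0.0, 0.0, 2.0],
    [0.0,  0.0, 1.0, 3.0],
]
position, quaternion = pose_matrix_to_position_quaternion(example_pose)
```

A real integration would also need to account for differing axis conventions (VR runtimes are commonly y-up, while ROS frames are conventionally z-up) before publishing the result; that remapping is left out here.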


Next, [Vlad] took to the water. His first attempt was a home-built airboat, which looked awesome but unfortunately didn’t work very well. In the end, he bought a $20 boat on eBay and built a MOSFET-based motor controller to drive its dual thrusters. This design worked much better, and after a bit of PID tuning the boat was autonomously navigating between waypoints in the water. In the future, [Vlad] plans to use the skills he learned on this project to make an autopilot for the

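For readers wondering what PID-steered waypoint navigation with two thrusters can look like, here is a rough sketch. It is not [Vlad]’s firmware: the class and function names, the gains, and the assumption that the boat knows its position in a flat local x/y frame (with heading measured counterclockwise from the +x axis, in radians) are all ours, chosen purely for illustration.

```python
import math


class PID:
    """Textbook PID controller (illustrative; gains are placeholders)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first sample, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(angle):
    """Wrap an angle in radians into the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def clamp(value, low, high):
    return max(low, min(high, value))


def thruster_commands(pid, x, y, heading, waypoint, dt, base_throttle=0.5):
    """Return (left, right) throttle in [0, 1] steering toward the waypoint.

    A positive heading error means the waypoint lies to the left, so the
    right thruster speeds up and the left one slows down.
    """
    wx, wy = waypoint
    bearing = math.atan2(wy - y, wx - x)
    error = wrap_angle(bearing - heading)
    turn = pid.update(error, dt)
    left = clamp(base_throttle - turn, 0.0, 1.0)
    right = clamp(base_throttle + turn, 0.0, 1.0)
    return left, right


# Example: boat at the origin facing +x, waypoint directly to its left.
pid = PID(kp=0.5, ki=0.0, kd=0.0)
left, right = thruster_commands(pid, 0.0, 0.0, 0.0, (0.0, 10.0), dt=0.1)
```

Tuning then comes down to adjusting the three gains until the boat turns toward each waypoint without overshooting or weaving, which is presumably the sort of trial and error the project involved.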