Synthetic-aperture radar, in which a moving radar is used to simulate a very large antenna and obtain high-resolution images, is typically not the stuff of hobbyists. Nobody told that to [Henrik Forstén], though, and so we’ve got this bicycle-mounted synthetic-aperture radar project to marvel over as a result.

Neither the electronics nor the math involved in making SAR work is trivial, so [Henrik]'s comprehensive write-up is invaluable for understanding what's going on. First step: build a 6-GHz frequency-modulated continuous-wave (FMCW) radar, a project [Henrik] undertook some time back that really knocked our socks off. His FMCW set is good enough to resolve human-scale objects at about 100 meters.
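For readers new to FMCW: the radar sweeps its frequency linearly over a bandwidth B during a chirp of length T, and mixing the echo with the outgoing sweep leaves a beat tone whose frequency is proportional to range, R = c·f_b·T / (2B), with a range resolution of roughly c / (2B). The sketch below illustrates only that relationship with NumPy. The bandwidth, chirp length, and sample rate are hypothetical values picked for illustration, not [Henrik]'s actual settings, and the signal is an ideal, noise-free single target.

```python
import numpy as np

C = 3e8  # speed of light, m/s

def simulate_beat(range_m, bandwidth_hz, chirp_s, fs_hz):
    """Ideal dechirped beat signal for one point target (hypothetical model, no noise)."""
    slope = bandwidth_hz / chirp_s              # chirp rate, Hz/s
    f_beat = 2 * range_m * slope / C            # beat frequency from round-trip delay 2R/c
    t = np.arange(int(chirp_s * fs_hz)) / fs_hz
    return np.cos(2 * np.pi * f_beat * t)

def estimate_range(beat, bandwidth_hz, chirp_s, fs_hz):
    """Find the strongest beat frequency and convert it back to range."""
    n = len(beat)
    spectrum = np.abs(np.fft.rfft(beat * np.hanning(n)))  # windowed to reduce leakage
    freqs = np.fft.rfftfreq(n, d=1 / fs_hz)
    f_peak = freqs[np.argmax(spectrum[1:]) + 1]           # skip the DC bin
    return f_peak * C * chirp_s / (2 * bandwidth_hz)

# Hypothetical sweep: 200 MHz in 1 ms, sampled at 1 MHz (range resolution about 0.75 m).
B, T, FS = 200e6, 1e-3, 1e6
print(estimate_range(simulate_beat(100.0, B, T, FS), B, T, FS))
```

The estimate lands within one FFT bin of the true 100 m; a wider sweep shrinks that bin, which is why FMCW designs chase bandwidth.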

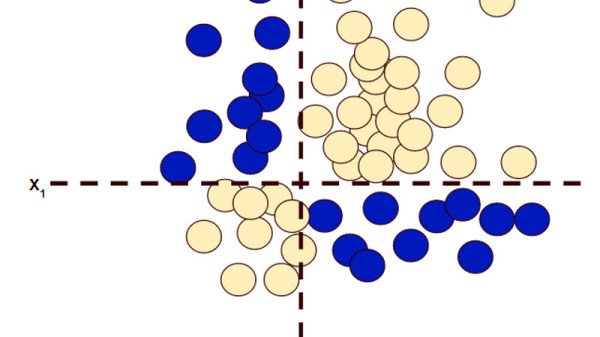

Moving the radar and capturing data along a path are the next steps, and those are pretty simple; figuring out what to do with the data is anything but. [Henrik] goes into great detail about the SAR algorithm he used, Omega-K, which relies on the Fast Fourier Transform and which he implemented on a GPU using TensorFlow. We usually see TensorFlow used for neural networks, but here it produced remarkably detailed 2D scans of a parking lot he rode through with the bike-mounted radar. [Henrik] added an autofocus routine as well, and you can clearly see each parked car, light pole, and distant building within range of the radar.
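Omega-K (also called the range migration algorithm) works in the wavenumber domain: an FFT along the flight path, a multiplication by a reference phase that exactly focuses one chosen range r0, a Stolt interpolation that remaps the range wavenumber so every other range is focused too, and a 2D inverse FFT back to an image. What follows is a minimal textbook-style sketch in NumPy, not [Henrik]'s TensorFlow code. It assumes ideal stop-and-hop data modeled as exp(-jKR) with K = 4πf/c, a straight track with uniform antenna spacing, and it ignores the FMCW residual video phase, antenna patterns, motion errors, and autofocus. The names `simulate_sar`, `omega_k`, `r0`, and `kx_max` are hypothetical.

```python
import numpy as np

C = 3e8  # speed of light, m/s

def simulate_sar(x_pos, freqs, targets):
    """Hypothetical ideal data: s[x, f] = sum over targets of exp(-j K R(x)), K = 4*pi*f/c."""
    K = 4 * np.pi * freqs / C
    data = np.zeros((len(x_pos), len(freqs)), dtype=complex)
    for xt, yt in targets:
        R = np.sqrt((x_pos - xt) ** 2 + yt ** 2)       # range from each antenna position
        data += np.exp(-1j * np.outer(R, K))
    return data

def omega_k(data, dx, freqs, r0, kx_max):
    """Textbook Omega-K: azimuth FFT, reference phase, Stolt interpolation, 2D IFFT."""
    nx, nk = data.shape
    K = 4 * np.pi * freqs / C                          # two-way range wavenumber
    Kx = 2 * np.pi * np.fft.fftfreq(nx, d=dx)          # azimuth wavenumber, FFT order
    S = np.fft.fft(data, axis=0)                       # 1) FFT along the flight path

    KX, KK = np.meshgrid(Kx, K, indexing="ij")
    valid = (np.abs(KX) <= kx_max) & (KK > np.abs(KX)) # drop evanescent/out-of-band bins
    Ky = np.sqrt(np.where(valid, KK ** 2 - KX ** 2, 0.0))
    S = np.where(valid, S * np.exp(1j * r0 * Ky), 0)   # 2) focus the reference range r0

    ky_grid = np.linspace(Ky[valid].min(), Ky[valid].max(), nk)
    S_stolt = np.zeros((nx, nk), dtype=complex)
    for i in range(nx):                                # 3) Stolt mapping K -> Ky, per row
        m = valid[i]
        if m.sum() < 2:
            continue
        S_stolt[i] = (np.interp(ky_grid, Ky[i, m], S[i, m].real, left=0, right=0)
                      + 1j * np.interp(ky_grid, Ky[i, m], S[i, m].imag, left=0, right=0))

    img = np.fft.fftshift(np.fft.ifft2(S_stolt), axes=1)  # 4) back to (x, y), y centered on r0
    dky = ky_grid[1] - ky_grid[0]
    x_axis = np.arange(nx) * dx
    y_axis = r0 + (np.arange(nk) - nk // 2) * 2 * np.pi / (nk * dky)
    return np.abs(img), x_axis, y_axis

# Hypothetical setup: 256 positions 1 cm apart, 5.5 to 6.5 GHz sweep, one target at (1.28 m, 11 m).
dx = 0.01
x_pos = np.arange(256) * dx
freqs = np.linspace(5.5e9, 6.5e9, 256)
img, xa, ya = omega_k(simulate_sar(x_pos, freqs, [(1.28, 11.0)]), dx, freqs, r0=10.0, kx_max=100.0)
i, j = np.unravel_index(np.argmax(img), img.shape)
print(xa[i], ya[j])
```

The 1 cm spacing is deliberate: to avoid azimuth aliasing, positions should be no more than about a quarter wavelength apart, roughly 1.25 cm at 6 GHz. Linear interpolation keeps the sketch dependency-free; practical implementations usually use a higher-order or sinc interpolator for the Stolt step. Since the heavy lifting is FFTs and interpolation over large arrays, it is easy to see why a GPU framework pays off.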

We find it pretty amazing what [Henrik] was able to accomplish with relatively low-budget equipment. Synthetic-aperture radar has a lot of applications, and we’d love to see this refined and developed further.

[via r/electronics]