Virtual reality doesn’t feel very real if your head is the only thing receiving the virtual treatment. For truly immersive experiences you must be able to use your body, and even interact with virtual props, in an intuitive way. For instance, in a first-person shooter you want to be able to hold the gun and use it just as you would in real reality. That’s exactly what [matthewhallberg] managed to do for just a few bucks.

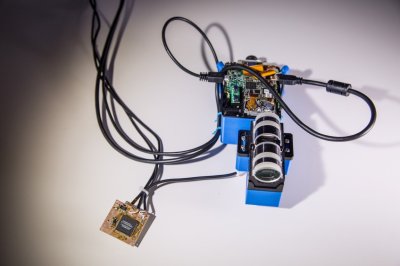

This project is an attempt to develop a VR shooting demo and the associated hardware on a budget, complete with tracking so that the gun can be aimed independent of the user’s view. [matthewhallberg] calls it The Oculus Cardboard Project, named for the combined approach of using a Google Cardboard headset for the VR part, and camera-based object tracking for the gun portion. The game was made in Unity 3D with the Vuforia augmented reality plugin. Not counting a smartphone and Google Cardboard headset, the added parts clocked in at only about $15.

Using corrugated cardboard and a printout, [matthewhallberg] created a handheld paddle-like device with buttons that acts as both controller and large fiducial marker for the smartphone camera. Inside the handle are a battery and an ESP8266 microcontroller. The buttons on the paddle provide “walk forward” and “shoot” triggers. The paddle represents the gun, and when you move it around, the smartphone’s camera tracks its orientation, so you can move and point the gun independently of your point of view. You can see it in action in the video below.
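To see why the gun can be aimed independently of your view, it helps to think of the gun’s pose as two rotations chained together: the head orientation reported by the phone’s sensors, followed by the paddle’s pose relative to the camera as reported by the marker tracker. The sketch below is a minimal illustration under stated assumptions, not the project’s actual Unity/Vuforia code. The helper names (`quat_mul`, `rotate`, `axis_angle`, `gun_pose_in_world`) are hypothetical, the camera frame is assumed to coincide with the head frame, the head is assumed to sit at the world origin (Cardboard tracks rotation only), and “forward” is assumed to be +z.

```python
import math

Quat = tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
Vec3 = tuple[float, float, float]


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a*b: the combined rotation applies b first, then a."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q, computed as q * v * conj(q)."""
    w, x, y, z = q
    conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), conj)
    return (rx, ry, rz)


def axis_angle(axis: Vec3, degrees: float) -> Quat:
    """Unit quaternion for a rotation of `degrees` about a unit-length `axis`."""
    half = math.radians(degrees) / 2
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def gun_pose_in_world(head_rot: Quat, marker_rot_in_cam: Quat,
                      marker_pos_in_cam: Vec3) -> tuple[Quat, Vec3]:
    """Hypothetical helper: combine head orientation with the tracked marker pose.

    Assumes the camera frame equals the head frame and the head is at the
    world origin, so only the head rotation needs to be applied.
    """
    rot = quat_mul(head_rot, marker_rot_in_cam)  # marker rotation first, then head
    pos = rotate(head_rot, marker_pos_in_cam)
    return rot, pos


if __name__ == "__main__":
    yaw = lambda d: axis_angle((0.0, 1.0, 0.0), d)
    # Head turned 90 degrees, but the paddle is twisted back by -90 degrees
    # relative to the camera: the gun still points straight ahead in world space.
    rot, pos = gun_pose_in_world(yaw(90), yaw(-90), (0.0, 0.0, 0.5))
    print("gun forward:", rotate(rot, (0.0, 0.0, 1.0)), "gun position:", pos)
```

The example shows the decoupling: turning your head changes `head_rot`, but the marker pose relative to the camera changes in the opposite direction, so the gun’s world orientation depends only on how you actually hold the paddle.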

Tracking a handheld paddle with a fiducial marker isn’t a brand-new idea. We found this project, for example, which also cleverly simulates a trigger input: squeezing the trigger physically alters the shape of the paddle. The squeeze changes the fiducial, and the camera registers that change as an input. However, [matthewhallberg]’s use of hardware buttons allows a wider variety of reliable inputs (walk and shoot, for example, rather than a single squeeze input). If you’re interested in trying it out, the project page has all the required details and source code.

This isn’t [matthewhallberg]’s first attempt at getting the most out of an economical Google Cardboard setup. He reused some of the ideas and parts from his earlier DIY Virtual Reality Snowboard project.
