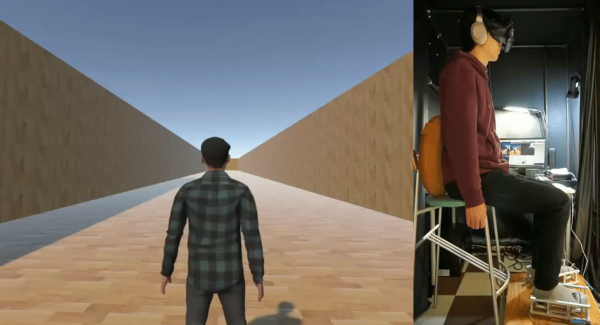

Virtual reality has seen enormous progress in the past few years. Given its recent surge in development, it may come as a bit of a surprise to learn that the foundations of what we now call VR were laid way back in the 60s. Not all of the imagined possibilities have come to pass, but we've learned plenty about what is (and isn't) important for a compelling VR experience, and gained insight into what might happen next.

If virtual reality’s best ideas came from the 60s, what were they, and how did they turn out?

Interaction and Simulation

First, I want to briefly cover two important precursors to what we think of as VR: interaction and simulation. Prior to the 1960s, the state-of-the-art examples of each were the Link Trainer and Sensorama, respectively.

The Link Trainer was an early kind of flight simulator, and its goal was to deliver realistic instrumentation and force feedback on aircraft flight controls. This allowed a student to safely gain an understanding of different flying conditions, despite not actually experiencing them. The Link Trainer did not simulate any other part of the flying experience, but its success showed how feedback and interactivity — even if artificial and limited in nature — could allow a person to gain a “feel” for forces that were not actually present.

Sensorama was a specialized pod that played short stereoscopic 3D films synchronized with fans, odor emitters, a motorized chair, and stereo sound. It was a serious effort to engage a user's senses in a way intended to simulate an environment. But because it was a pre-recorded experience, it was passive in nature, with no interactive elements.

Effectively combining interaction with simulation had to wait until the 60s, when the digital revolution and computers provided the right tools.

The Ultimate Display

In 1965 Ivan Sutherland, a computer scientist, authored an essay entitled The Ultimate Display, in which he laid out ideas far beyond what was possible with the technology of the time. One might expect The Ultimate Display to be a long document. It is not. It is barely two pages, and most of the first page consists of musings on the burgeoning interactive computer input methods of the 60s.

The second part is where it gets interesting, as Sutherland shares the future he sees for computer-controlled output devices and describes an ideal "kinesthetic display" that would serve as many senses as possible. Sutherland saw the potential for computers to simulate ideas and to output not just visual information but meaningful sound and touch as well, all while accepting and incorporating a user's input in a self-modifying feedback loop. This was forward-thinking stuff; recall that when this document was written, computers weren't even generating meaningful sounds of any real complexity, let alone driving visual displays capable of arbitrary content.