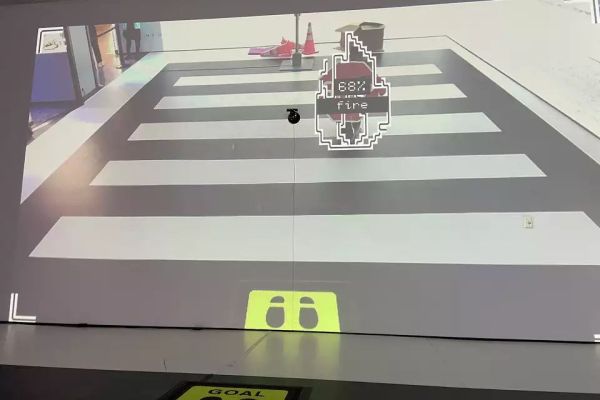

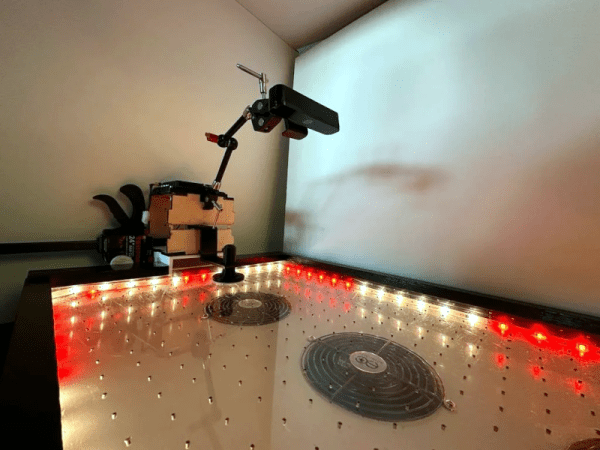

In the discussions about how dangerous self-driving cars are – or aren’t – one thing is sorely missing, and that is an interactive game in which you do your best not to be recognized as a pedestrian, and subsequently get run over. Even if this is a somewhat questionable take, there’s something to be said for the interactive display at the Asian Art Museum in San Francisco, which has you try to escape the tyranny of machine vision by getting recognized as a crab, a traffic cone, or anything else that’s not pedestrian-shaped.

The display ran from March 21st to March 23rd, and [Stephen Council] of SFGate took a swing at the challenge. As can be seen in the above image, he managed to get labelled as ‘fire’ during one attempt by hiding behind a stop sign as he walked across the crosswalk. Other methods include crawling and (ab)using a traffic cone.

Created by [Tomo Kihara] and [Daniel Coppen], the installation is intended to be a ‘playful, engaging game installation’. Both creators make it clear that self-driving vehicles using LIDAR and other advanced detection methods are much harder to fool, but given how many Teslas are on the road relying on camera-based systems, it’s still worth demonstrating the shortcomings of camera-only detection.

There’s no shortage of debate about whether or not autonomous vehicles are ready to share the roads with human drivers, especially when they exhibit unusual behavior. We’ve already seen protesters attempt to confuse self-driving systems with methods that aren’t far removed from what [Kihara] and [Coppen] have demonstrated here, and it seems likely such antics will only become more common with time.