[Chris Gammell] moderated the KiCad 2022 End-of-Year Recap with several KiCad developers and librarians. They reviewed what has been bubbling up in the nightly development builds that followed KiCad 6, what we can expect from KiCad 7, and even answered some questions from the user community. Over the course of 2022, the KiCad project has grown both its development team and its library team. The project even has a preliminary support commitment from the CERN Drawing Office!

Improvements to the KiCad Schematic Editor include smart wire dragging, which simplifies moving components around within schematic diagrams. Components selected in the schematic now remain selected when switching to the PCB Editor. In-schematic documentation has advanced with support for fonts, embedded graphics, and hyperlinks to datasheets and other reference materials. PDF export now produces interactive files with links between sheets.

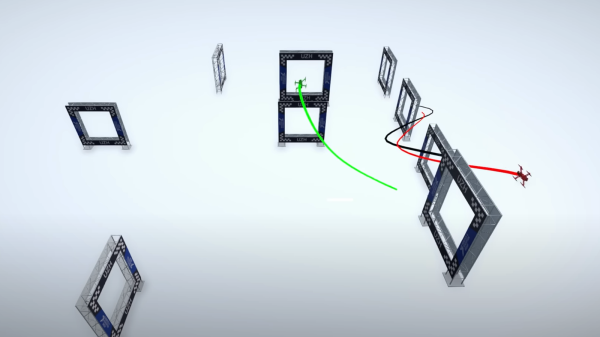

A new search panel within the KiCad PCB Editor supports finding components by footprint, net, or text. A properties panel allows common properties to be edited across multiple selected items. While a full-blown autorouter remains outside the scope of KiCad, “push and shove” routing is now faster and easier to use. An “attempt to finish” feature quickly completes the connection for the currently selected trace, and “pack and move” gathers all selected footprints close together so they can be placed as neighbors within the board layout.

The KiCad PCB Editor also adds support for fonts and for inverted “knockout text”, which even works on copper zones. Bitmap graphics can be imported and scaled beneath the layout as reference illustrations. Private footprint layers can be used to place extra documentation within footprints. The design rule checker (DRC) can now catch more layout issues, especially those that may affect manufacturability.

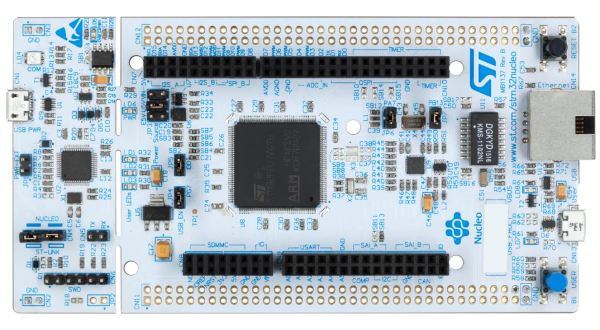

These are just a sample of the impressive improvements we can expect in KiCad 7.0. There are also additions to the circuit simulation and modeling features, a new command line interface for script-based automation, native ARM64 support for running KiCad on Apple silicon, and a huge number of additions to the default library, including symbols, footprints, and 3D models.

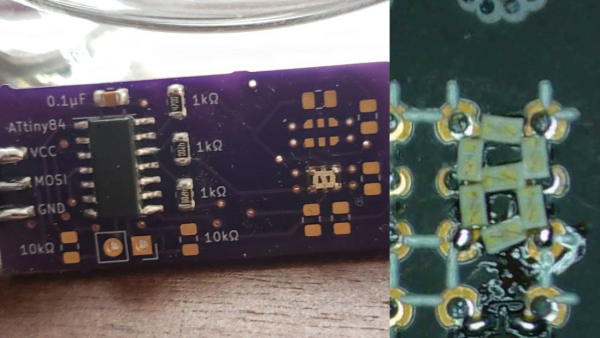

The KiCad team suggests several ways to support the project. There is always a need for additional developers and librarians. Financial contributions can be made at kicad.org. As users, we can run the nightly builds, try to break them, and give feedback in the form of detailed bug reports. Community testing will help make KiCad 7.0 as solid as possible. The project team is also seeking open hardware projects to include with KiCad 7.0 as demos; the StickHub project, for example, was included with KiCad 6.0 as a demo.

The official release of KiCad 7.0 is currently scheduled for January 31, 2023. While we wait, let’s flash back to our January 2022 presentation of the features that made it into the KiCad 6.0 release.
