It’ll be Pi Day when this article goes live, at least for approximately half the globe west of the prime meridian. We always enjoy Pi Day, not least for the excuse to enjoy pie and other disc-shaped foods. It’s also cool to ponder the mysteries of a transcendental number, which usually get a good treatment by the math YouTube community. This year was no disappointment in this regard, as we found two good pi-related videos, both by Matt Parker over at Standup Maths. The first one deals with raising pi to the pi to the pi to the pi and how that may or may not result in an integer that’s tens of trillions of digits long. The second and more entertaining video is a collaboration with Steve Mould which aims to estimate the value of pi by measuring the volume of a molecular monolayer of oleic acid floating on water. The process was really interesting and the results were surprisingly accurate; this might make a good exercise to do with kids to show them what pi is all about.

Remember basic physics and first being exposed to the formula for universal gravitation? We sure do, and we remember thinking that it should be possible to calculate the force between us and our classmates. It is, of course, but actually measuring the attractive force would be another thing entirely. But researchers have done just that, using objects substantially smaller than the average high school student: two 2-mm gold balls. The apparatus the Austrian researchers built used 90-milligram gold balls, one stationary and one on a suspended arm. The acceleration between the two moves the suspended ball, which pivots a mirror attached to the arm to deflect a laser beam. That they were able to tease a signal from the background noise of electrostatic, seismic, and hydrodynamic forces is quite a technical feat.
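To get a feel for why this is such a hard measurement, here's a rough back-of-the-envelope sketch of Newton's law of universal gravitation, F = G·m₁·m₂/r², applied to two 90-milligram masses. The 2.5 mm center-to-center separation is purely our assumption for illustration (the 2-mm balls can't get closer than 2 mm); it is not a figure from the researchers' setup.

```python
# Back-of-the-envelope gravity between two tiny gold balls.
# Only the 90 mg mass comes from the article; the separation is an
# ASSUMED value chosen for illustration.

G = 6.67430e-11  # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)


def gravitational_force(m1_kg, m2_kg, r_m):
    """Newton's law of universal gravitation: F = G * m1 * m2 / r^2."""
    if r_m <= 0:
        raise ValueError("separation must be positive")
    return G * m1_kg * m2_kg / r_m**2


mass_kg = 90e-6        # 90 milligrams, per the article
separation_m = 2.5e-3  # ASSUMPTION: 2.5 mm between ball centers

force_n = gravitational_force(mass_kg, mass_kg, separation_m)
accel_m_s2 = force_n / mass_kg  # acceleration of one ball toward the other

print(f"force:        {force_n:.2e} N")
print(f"acceleration: {accel_m_s2:.2e} m/s^2")
```

Under that assumed spacing the force works out to just under 10⁻¹³ newtons, which gives some idea of how easily it would be swamped by the other forces mentioned above.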

We noticed a lot of interest in the Antikythera mechanism this week, which was apparently caused by the announcement of the first-ever complete computational model of the ancient device’s inner workings. The team from University College London used all the available data gleaned from the 82 known fragments of the mechanism to produce a working model of the mechanism in software. This in turn was used to create some wonderful CGI animations of the mechanism at work — this video is well worth the half-hour it takes to watch. The UCL team says they’re now at work building a replica of the mechanism using modern techniques. One of the team says he has some doubts that ancient construction methods could have resulted in some of the finer pieces of the mechanism, like the concentric axles needed for some parts. We think our friend Clickspring might have something to say about that, as he seems to be doing pretty well building his replica using nothing but tools and methods that were available to the original maker. And by doing so, he managed to discern a previously unknown feature of the mechanism.

We got a tip recently that JOGL, or Just One Giant Lab, is offering microgrants for open-source science projects aimed at tackling the problems of COVID-19. The grants are for 4,000€ and require a minimal application and reporting process. The window for application is closing, though — March 21 is the deadline. If you’ve got an open-source COVID-19 project that could benefit from a cash infusion to bring to fruition, this might be your chance.

And finally, we stumbled across a video highlighting some of the darker aspects of amateur radio, particularly those who go through tremendous expense and effort just to be a pain in the ass. The story centers around the Mt. Diablo repeater, an amateur radio repeater located in California. Apparently someone took offense at the topics of conversation on the machine, and deployed what they called the “Annoy-o-Tron” to express their displeasure. The device consisted of a Baofeng transceiver, a cheap MP3 player loaded with obnoxious content, and a battery. With the whole thing encased in epoxy resin and concrete inside a plastic ammo can, the jammer lugged the beast up a hill 20 miles (32 km) from the repeater, trained a simple Yagi antenna toward the site, and walked away. It lasted for three days, and while the amateurs complained about the misuse of their repeater, they apparently didn’t do a thing about it. The device was retrieved six weeks after the fact and hasn’t been heard from since.

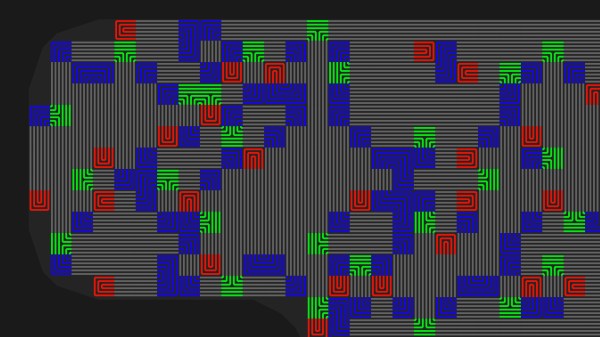

The idea is pretty simple: place traces very close to one another, and it becomes impossible to drill into the case of a device without upsetting the apple cart. There are other uses as well, such as embedding them in adhesives that destroy the traces when pried apart. For [Sebastian’s] experiments he’s sticking with PCBs because of the ease of manufacture. His plugin lays down a footprint that has four pads to begin and end two loops in the mesh. The plugin looks for an outline to fence in the area, then uses a space-filling curve to generate the path. This proof of concept works, but it sounds like there are some quirks that can crash KiCad.
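For a sense of how a space-filling curve turns an area into one long, tightly packed trace, here's a minimal sketch using a Hilbert curve. We don't know which curve the plugin actually uses, so treat the choice of Hilbert as our assumption. The function names and the `pitch_mm` parameter are hypothetical, nothing here touches KiCad's API, and the sketch fills a plain square with a single path rather than fitting two loops inside an arbitrary outline.

```python
# Sketch: a Hilbert curve as a stand-in for a mesh trace path.
# ASSUMPTION: the real plugin may use a different space-filling curve.


def hilbert_d2xy(order, d):
    """Map distance d along a Hilbert curve to (x, y) on a 2^order grid."""
    n = 1 << order
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:
            # Rotate/flip the quadrant so sub-curves join end to end.
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_path(order, pitch_mm=0.3):
    """Return the curve as a list of (x_mm, y_mm) points.

    pitch_mm is a hypothetical trace pitch, not a value from the plugin.
    """
    n = 1 << order
    return [
        (x * pitch_mm, y * pitch_mm)
        for x, y in (hilbert_d2xy(order, d) for d in range(n * n))
    ]


if __name__ == "__main__":
    path = hilbert_path(order=3)
    print(f"{len(path)} points, first few: {path[:4]}")
```

Every grid cell is visited exactly once and consecutive points are always one pitch apart, which is the property that keeps the trace dense enough that a drill bit can't slip through without cutting it.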
