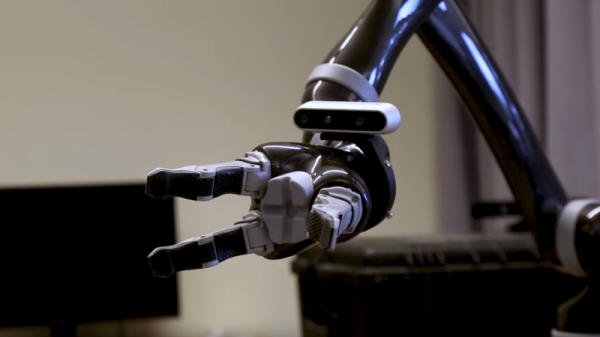

Learning through play is effective for humans of all ages, and since 2016 [slantconcepts] has been designing STEM kits that help teach kids to build their future overlords. They are launching version 3 of their LittleArm robotic arm, and the progression from version 1 is an interesting study in simplification and parts count reduction without sacrificing functionality.

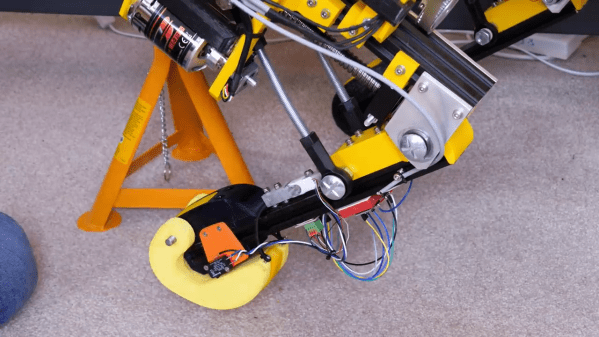

In all of the LittleArm versions, the main mechanical components are 3D printed and driven by three servos for motion, plus one additional servo to run the gripper. These kits are specifically intended to be built and disassembled repeatedly, and since classrooms are a great place for small screws to disappear, reducing the number of screws was a big goal for v3. The gripper/forearm shows the most dramatic improvement over the previous versions: it has been simplified from 8 separate components to a single 3D printed part by using a compliant mechanism, the squiggly pattern that allows the gripper to flex into place. The gripper tips also feature a simple cutout that lets them grasp horizontal objects more easily.

An Arduino Nano-based expansion board controls the arm, and an HC-06 Bluetooth module allows it to be driven from a smartphone app. Various sensors can also be added to expand the kit's capabilities. Unfortunately the mechanical design is not open source, but it can still be a source of inspiration for your own design projects.
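If you want to build something similar, the HC-06 simply shows up to the Nano as a serial port, so the firmware's job boils down to turning incoming bytes into servo angles. The sketch below is only an illustration of that idea, not LittleArm's actual protocol (which isn't described here): it assumes a hypothetical text format of one `index,angle` command per line, with servos 0 to 2 for the arm joints and 3 for the gripper, and uses made-up names (`parse_command`, `LineBuffer`). On real hardware the chunks would come from `Serial.read()` and each result would go to the Arduino `Servo` library's `write()`, which takes an angle from 0 to 180 degrees.

```python
from typing import List, Optional, Tuple

NUM_SERVOS = 4  # 3 motion servos + 1 gripper servo (index 3 is the gripper in this hypothetical scheme)
MIN_ANGLE, MAX_ANGLE = 0, 180  # range accepted by Arduino's Servo.write()


def parse_command(line: str) -> Optional[Tuple[int, int]]:
    """Parse a hypothetical 'index,angle' command; return None if it is malformed."""
    parts = line.strip().split(",")
    if len(parts) != 2:
        return None
    try:
        index, angle = int(parts[0]), int(parts[1])
    except ValueError:
        return None
    if not 0 <= index < NUM_SERVOS:
        return None  # unknown servo: ignore the command entirely
    # Clamp rather than reject, so a slightly out-of-range slider value still moves the arm
    return index, max(MIN_ANGLE, min(MAX_ANGLE, angle))


class LineBuffer:
    """Accumulate text arriving over the Bluetooth serial link into complete lines."""

    def __init__(self) -> None:
        self._buf = ""

    def feed(self, chunk: str) -> List[Tuple[int, int]]:
        """Add a chunk and return every valid command completed by it."""
        self._buf += chunk
        # The last element is an incomplete line (possibly empty); keep it for next time
        *lines, self._buf = self._buf.split("\n")
        parsed = (parse_command(line) for line in lines)
        return [cmd for cmd in parsed if cmd is not None]
```

Buffering by line matters because serial data can arrive in arbitrary fragments: feeding `"0,90\n1,200\n2,4"` yields `(0, 90)` and a clamped `(1, 180)`, while `"2,4"` waits until a later `"5\n"` completes it as `(2, 45)`.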

Hopefully this kit will inspire some future hackers to build a more advanced 3D printed version, or even a giant hydraulic powered arm.