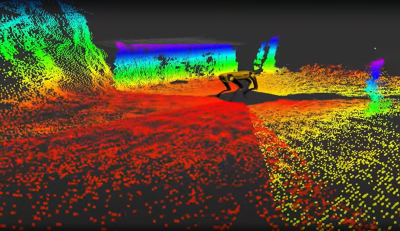

We write a lot about self-driving vehicles here at Hackaday, but it’s fair to say that most of the limelight has fallen on large, well-known technology companies on the west coast of the USA. A great deal of research into autonomous transport is happening in other parts of the world too, and on that note there’s an interesting milestone from Europe: the British company Oxbotica has made the first zero-occupancy on-road journey in Europe, on a public road in Oxford, UK.

The glossy promo video below the break shows the feat. The vehicle, whose number plates signify its on-road legality, drives round the relatively quiet roads of one of the city’s technology parks, and the video promises a bright future of local deliveries and urban transport. The vehicle itself is interesting. It’s a platform supplied by the Aussie outfit AppliedEV, an electric spaceframe vehicle designed to provide a versatile base for autonomous transport. As such, unlike so many of the vehicles from the aforementioned high-profile companies, it has no passenger cabin and no on-board driver to take the wheel in a calamity; instead it’s driven by Oxbotica’s technology, with the company’s sensor pylon mounted at its centre.
