Humans weren’t made to sit in front of a computer all day, yet for many of us that’s how we spend a large part of our lives. Of course we all know that it’s important to get up and move around every now and then to stretch our muscles and get our blood flowing, but it’s easy to forget if you’re working towards a deadline. [Victor Sonck] thought he needed some reminders — as well as some not-so-gentle nudging — to get into the habit of doing a quick workout a few times a day.

To this end, he designed a piece of software that would lock his computer’s screen and only unlock it if he performed five push-ups. Locking the screen on his Linux box was as easy as sending a command over the network, but recognizing push-ups was a harder task, for which [Victor] decided to employ machine learning. A Raspberry Pi with a webcam attached could do the trick, but the limited processing power of the Pi’s CPU might prove insufficient for processing lots of raw image data.

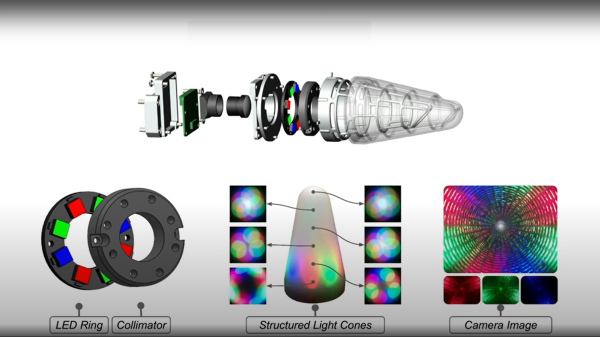

[Victor] therefore decided on using a Luxonis OAK-1, which is a 4K camera with a built-in machine-learning processor. It can run various kinds of image recognition systems including BlazePose, a pre-trained model that can recognize a person’s pose from an image. The OAK-1 uses this to send a set of coordinates describing the position of a person’s head, torso and limbs to the Raspberry Pi over USB. A second machine-learning model running on the Pi then analyzes these coordinates to recognize push-ups.
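To give a feel for the second stage, here is a minimal Python sketch that counts push-ups from a stream of pose coordinates. It is not [Victor]’s method: he trained a machine-learning model for this step, whereas the sketch swaps in a simple elbow-angle threshold to keep things short. The function names, the `(shoulder, elbow, wrist)` frame format, and the 90° and 160° thresholds are all assumptions made for illustration.

```python
import math

REQUIRED_REPS = 5  # the article's unlock condition: five push-ups


def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by a-b-c, or None if degenerate."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return None  # two keypoints coincide, so the angle is undefined
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def count_pushups(frames, down_deg=90.0, up_deg=160.0):
    """Count repetitions in an iterable of (shoulder, elbow, wrist) 2D points.

    A rep is counted when the elbow bends to down_deg or less and then
    straightens to up_deg or more. The thresholds are hypothetical and
    would need tuning against real camera data.
    """
    count = 0
    is_down = False
    for shoulder, elbow, wrist in frames:
        angle = joint_angle(shoulder, elbow, wrist)
        if angle is None:
            continue  # skip frames with unusable keypoints
        if not is_down and angle <= down_deg:
            is_down = True
        elif is_down and angle >= up_deg:
            is_down = False
            count += 1
    return count


def should_unlock(frames):
    """True once enough push-ups have been seen in the given frames."""
    return count_pushups(frames) >= REQUIRED_REPS
```

A fixed threshold like this is easy to fool and sensitive to camera angle, which is one plausible reason to prefer a trained classifier on the full set of keypoints, as the project does.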

[Victor]’s video (embedded below) is an entertaining introduction to the world of machine-learning systems for video processing, as well as a good hands-on example of a project that results in a useful tool. If you’re interested in learning more about machine learning on small platforms, check out this 2020 Remoticon talk on machine learning on microcontrollers, or this 2019 Supercon talk about implementing machine vision on a Raspberry Pi.
