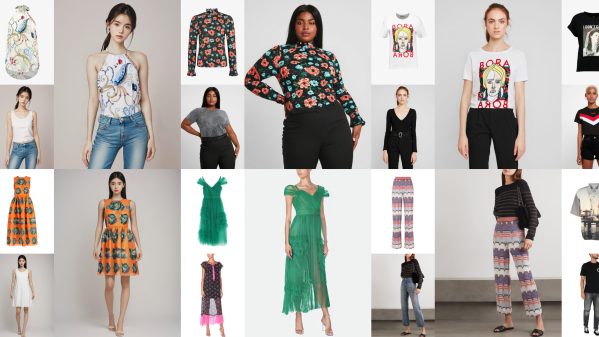

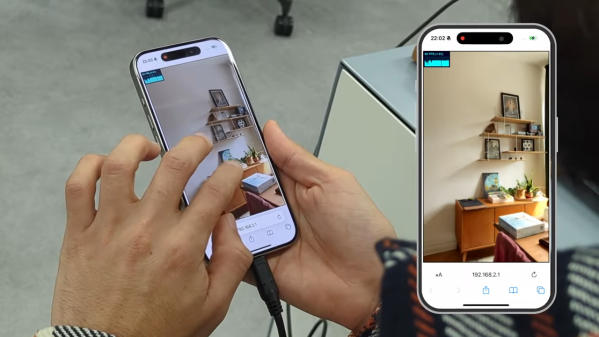

Image generators have really taken off thanks to machine learning, and all kinds of new ideas have sprung up as a result. OOTDiffusion is one such project: it enables virtual try-ons of clothing by combining a picture of a person with a picture of a garment, and doing so in a coherent way.

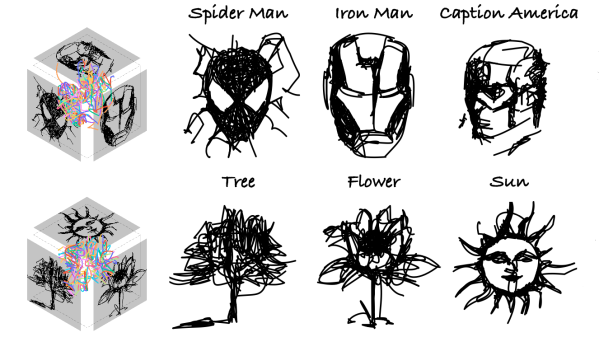

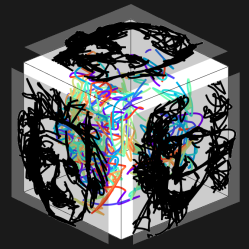

When it comes to AI image generators, maintaining consistency of a particular subject in a picture while changing or combining other parts of the image isn’t a trivial task. (If you’re unfamiliar with the basics of how diffusion-type AI image generators work, we have you covered.)

Virtual try-on of clothing is not a new idea, but it’s also far from being a completely solved problem. It’s easy to feed a system high-quality images of people and clothing and ask it to combine them, but the outputs rarely emerge with all their limbs intact, figuratively speaking.

OOTDiffusion addresses the two big challenges in this area: making sure the outputs look natural and realistic, and preserving as much of the garment’s appearance and qualities as possible in the process.

It seems to do a very good job, and you can try it for yourself in the online demo. Check out the research paper for more details, and the GitHub repository provides all the code if you’d like to get a little more hands-on.