We’ve seen a fair number of Tesla coil builds, but ones using vacuum tubes are few and far between. Maybe it’s the scarcity of high-power tubes, or a lack of experience with them among the younger crop of hackers. [Radu Motisan] built a vacuum tube Tesla coil several years back and only recently tipped us off. Considering it was his first rodeo with vacuum tubes, he did pretty well: not only did he get good results, he also learned a lot in the process.

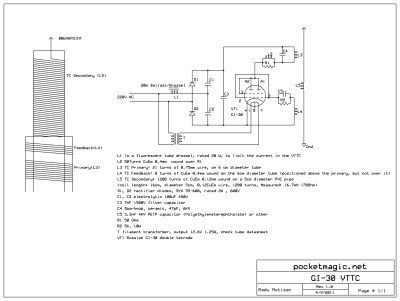

His design is based around a GI-30, a medium-power dual tetrode. The circuit is a classic Armstrong oscillator with very few parts, and it should be easy to build if you can lay your hands on the tricky parts. The high voltage capacitors may take some scrounging, and of course you’ll need to hand-wind the three coils that make up the output transformer.
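To a first approximation, an Armstrong oscillator runs near the resonant frequency of its tuned LC circuit, f = 1 / (2π√(LC)). The Python sketch below just evaluates that formula; the 100 µH and 2 nF values are hypothetical and are not taken from [Radu]’s build.

```python
import math


def tank_resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency of an ideal LC tank, f = 1 / (2*pi*sqrt(L*C)), in hertz."""
    if inductance_h <= 0 or capacitance_f <= 0:
        raise ValueError("L and C must be positive")
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


# Hypothetical component values, not from the original build:
# a 100 uH primary coil with a 2 nF tank capacitor.
f = tank_resonant_frequency(100e-6, 2e-9)
print(f"{f / 1e3:.0f} kHz")  # 356 kHz
```

In a real coil, stray capacitance and coupling to the other windings will pull the frequency away from this ideal figure.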

Getting the turns ratios of the coils right is critical for proper power transfer to the output, and it took [Radu] a fair amount of trial and error to get there.
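One generic way to get a starting point before winding is Wheeler’s approximation for a single-layer air-core solenoid, L ≈ r²N² / (9r + 10ℓ) µH, with radius r and winding length ℓ in inches. It is usually quoted as accurate to about 1% when ℓ is greater than roughly 0.8r. This is a sketch, not [Radu]’s method, and it won’t eliminate the trial and error, since coupling between the coils and stray capacitance still shift the result. The coil dimensions below are hypothetical.

```python
import math


def wheeler_inductance_uh(turns: float, radius_in: float, length_in: float) -> float:
    """Wheeler's approximation for a single-layer air-core solenoid.

    radius_in and length_in are the coil radius and winding length in inches;
    the result is in microhenries.
    """
    return (radius_in ** 2 * turns ** 2) / (9 * radius_in + 10 * length_in)


def turns_for_inductance(target_uh: float, radius_in: float, length_in: float) -> float:
    """Invert Wheeler's formula for the turn count, assuming a fixed winding length."""
    return math.sqrt(target_uh * (9 * radius_in + 10 * length_in)) / radius_in


# Hypothetical coil former: 1 in radius, 4 in winding length.
print(f"{wheeler_inductance_uh(100, 1.0, 4.0):.1f} uH")  # 204.1 uH
print(f"{turns_for_inductance(150, 1.0, 4.0):.1f} turns")
```

Holding the winding length fixed keeps the inversion a closed-form square root. For a close-wound coil, the length actually grows with the turn count, so treat the result as a first guess to refine.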

Using a 20 W fluorescent tube ballast to limit the inrush current is a nice touch that prevents nuisance tripping of the breakers. If you’d like to make one of your own, head over to his blog post, where pictures document the build in detail. If you do, be extremely careful: beyond the obvious high voltage at the output, the circuit carries lethal voltages throughout, since it runs directly from the 220 V utility supply.
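A choke ballast limits current because its reactance at mains frequency, X_L = 2πfL, sits in series with the load. Even with the load shorted, the RMS current can’t exceed V / √(X_L² + R²), where R is the winding resistance. The sketch below assumes the ballast is wired in series with the supply, a 50 Hz mains frequency, and a hypothetical 1.8 H ballast inductance. Measure your own ballast rather than relying on that figure.

```python
import math


def inductive_current_limit(v_rms: float, freq_hz: float, inductance_h: float,
                            winding_resistance_ohm: float = 0.0) -> float:
    """Upper bound on RMS current through a series choke with the load shorted."""
    reactance = 2 * math.pi * freq_hz * inductance_h             # X_L = 2*pi*f*L
    impedance = math.hypot(reactance, winding_resistance_ohm)    # |Z| = sqrt(X^2 + R^2)
    return v_rms / impedance


# Assumed 220 V / 50 Hz supply and a hypothetical 1.8 H ballast.
print(f"{inductive_current_limit(220, 50, 1.8):.2f} A")  # 0.39 A
```

This describes steady-state behavior only. The switch-on transient depends on where in the mains cycle the circuit closes, but the series inductance still keeps the current from jumping instantly.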