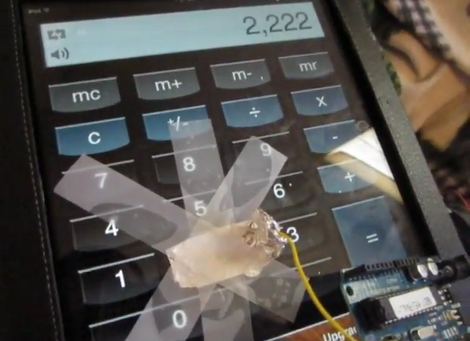

[Jay Kickliter] sent in his latest electronic business card. This time, his goal was to make it much cheaper so he could actually afford to give it away, and he did pretty well considering the two-week timeline he mentions. The card uses an MSP430 and the capsense library to light up some LEDs any time the card is handled. Although it is much cheaper than his last one, it still costs around $8 a card, so he won't be tossing these into everyone's hands. He does point out, though, that it always helps to have hardware to show off at a hardware interview, and an electronic business card does that job very well.

As usual, you can read more details and download the files on his blog.