Although graphical programming languages have been around for ages, they haven’t seen much use outside of educational settings. Two of the few counterexamples are Pure Data and Max/MSP, visual programming languages that make music and video development as easy as dropping a few boxes down and drawing lines between them.

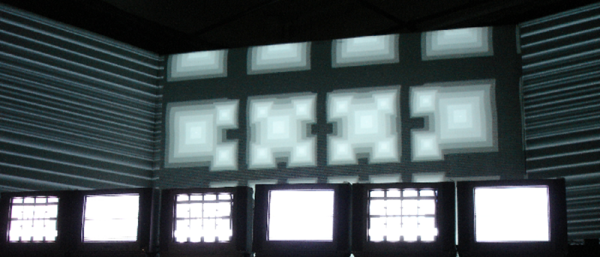

A few years ago, [Thomas] and [Danny] developed a very cool Pure Data audio-visual presentation. The program they developed only generated graphics, but through clever coding they were able to generate a few audio signals from whatever video was coming out of their computer. The project is called TVestroy, and it’s one of the coolest audio-visual presentations you’ll ever see.

The entire program is presented on three large screens and nine CRT televisions. With some extremely clever code and a black box of electronics, the video becomes the audio. Check it out below.

Although this is a relatively old build, [Thomas] thought it would be a good idea to revisit the project now. He’s open sourced most of the Pure Data files, and everything can be downloaded on the project page.


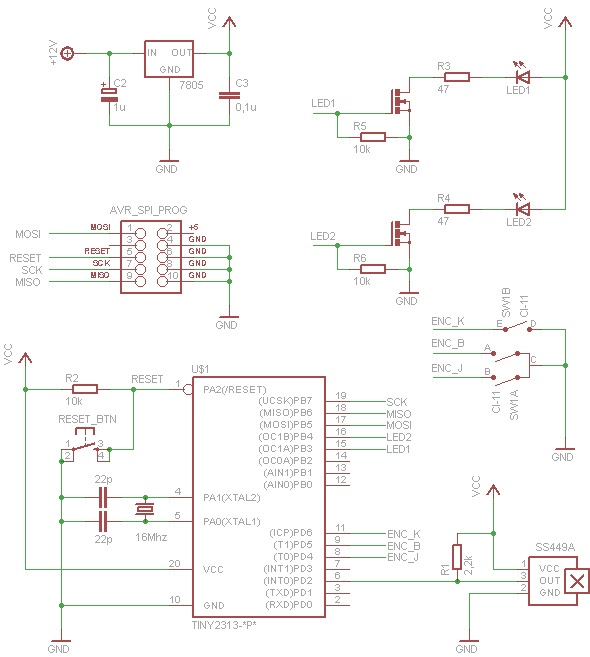

He used the frame, disk and motor from a drive and added LEDs under the spinning disk as the light source. The disk has 8 small holes drilled equidistant around it, spiraling slightly toward the center. As the holes pass over the LEDs, the ATtiny2313 processor flashes them to create images. To determine the position of the platter, the 2313 monitors a Hall effect sensor that detects a magnet on the underside of the disk. There is room to display ten characters at one time, and each cursor position can scroll through the character set by rotating an encoder. For all the precision needed to coordinate the LEDs with the spinning holes, the electronics and software are amazingly simple. That’s a really nice job, [Adam]!
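To get a feel for the timing involved, here is a rough Python model of the idea rather than [Adam]’s actual firmware. The eight holes and ten characters come from the build; everything else is an assumption made for illustration: six pixel columns per character, a display window exactly as wide as the gap between two holes, and the hypothetical names `slot_for_time` and `led_on`. The Hall sensor pulse marks time zero, and the measured rotation period is split into equal slots, one per pixel.

```python
HOLES = 8            # from the build: eight holes, each one sweeps one row
CHARS = 10           # from the build: ten characters visible at once
COLS_PER_CHAR = 6    # assumption: 5 pixel columns plus 1 blank spacer
COLUMNS = CHARS * COLS_PER_CHAR
SLOTS = HOLES * COLUMNS  # pixel slots in one full revolution


def slot_for_time(t_us, period_us):
    """Map microseconds since the Hall pulse to a (row, column) pixel.

    period_us is the time of the previous full revolution, as a timer
    started on each Hall pulse would measure it.
    """
    slot = (t_us * SLOTS) // period_us % SLOTS
    return divmod(slot, COLUMNS)  # row = which hole, column = position in window


def led_on(framebuffer, t_us, period_us):
    """Return True if the LEDs should be lit at this instant."""
    row, col = slot_for_time(t_us, period_us)
    return bool(framebuffer[row][col])


if __name__ == "__main__":
    # One lit pixel in row 1, column 0, with a 48 ms revolution (assumed speed).
    fb = [[0] * COLUMNS for _ in range(HOLES)]
    fb[1][0] = 1
    for t in (0, 6000, 6100):
        print(t, slot_for_time(t, 48000), led_on(fb, t, 48000))
```

On a real microcontroller the same mapping would more likely be driven by a timer interrupt than computed on demand, but the arithmetic is the same: one Hall pulse per revolution is enough to place every pixel.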


Video arcades may be a thing of the past, but they were alive, well, and ready to play at this year’s World Maker Faire. The offerings weren’t old favorites: all were brand new games, many being shown for the first time, like the long-awaited VEC9. The Hall of Science building was filled with cabinets, and no quarters were necessary since every machine was set to free play.
