On November 6th, Northwestern University introduced a leap forward in haptic technology, and it is still worth every bit of attention two weeks later. Full details are in their original article. The device brings tactile feedback into the future with a hexagonal array of 19 miniature actuators embedded in a flexible silicone mesh. It's the stuff of dreams for hackers and tinkerers looking for the next big thing in wearables.
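For anyone wanting to lay out a similar array, note that 19 is exactly what a hexagonal grid gives for a centre cell plus two surrounding rings (1 + 6 + 12). The sketch below generates such a layout in Python. The function names and the `pitch` spacing are illustrative assumptions, and the two-ring arrangement is one natural reading of "hexagonal array of 19", not a confirmed detail of the Northwestern design.

```python
import math


def hex_cells(rings: int) -> list[tuple[int, int]]:
    """Return axial (q, r) coordinates for a centre cell plus `rings` rings."""
    cells = []
    for q in range(-rings, rings + 1):
        # Limit r so that the implied third cube coordinate stays in range.
        r_min = max(-rings, -q - rings)
        r_max = min(rings, -q + rings)
        for r in range(r_min, r_max + 1):
            cells.append((q, r))
    return cells


def axial_to_xy(q: int, r: int, pitch: float = 1.0) -> tuple[float, float]:
    """Convert axial coordinates to x/y, with `pitch` between neighbours.

    `pitch` is a hypothetical centre-to-centre spacing; the real value
    is not given in the article.
    """
    x = pitch * (q + r / 2)
    y = pitch * (math.sqrt(3) / 2) * r
    return x, y


if __name__ == "__main__":
    layout = [axial_to_xy(q, r) for q, r in hex_cells(2)]
    print(len(layout), "actuator positions")
```

Axial coordinates keep neighbour lookups simple, which helps if you later want to drive patterns that sweep across adjacent actuators.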

What makes this patch truly cutting-edge? First, it offers multi-dimensional feedback: pressure, vibration, and twisting sensations—imagine a wearable that can nudge or twist your skin instead of just buzzing. Unlike the simple, one-note “buzzers” of old devices, this setup adds depth and realism to interactions. For those in the VR community or anyone keen on building sensory experiences, this is a game changer.

But the real kicker is its energy management. The patch incorporates a 'bistable' mechanism, meaning each actuator can rest in either of two stable positions without continuous power. It also saves energy by recovering elastic energy stored in the skin. Think of it like a rubber band that snaps back and releases stored energy during operation. The result? Longer battery life and efficient power usage, perfect for tinkering with extended use cases.

And it's not all fun and games (though VR fans should rejoice). This patch turns sensory substitution into practical tech for the visually impaired, using LiDAR data sent over Bluetooth to translate the surroundings into tactile feedback. It's like a white cane, but integrated with data-rich spatial awareness feedback: a boost for accessibility.
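To make the sensory substitution idea concrete, here is a minimal Python sketch of how distance readings might be mapped to actuator intensities, with closer obstacles producing stronger feedback. This is not the Northwestern team's algorithm: the linear mapping, the `near_m` and `far_m` thresholds, and the function names are all assumptions chosen for illustration.

```python
def depth_to_intensity(distance_m: float,
                       near_m: float = 0.3,
                       far_m: float = 3.0) -> float:
    """Map a distance in metres to an intensity between 0.0 and 1.0.

    Anything at or inside `near_m` gives full intensity, anything at or
    beyond `far_m` gives none, with a linear ramp in between. Both
    thresholds are hypothetical values, not taken from the article.
    """
    if distance_m <= near_m:
        return 1.0
    if distance_m >= far_m:
        return 0.0
    return (far_m - distance_m) / (far_m - near_m)


def frame_to_intensities(distances_m: list[float]) -> list[float]:
    """Convert one distance per actuator into one intensity per actuator."""
    return [depth_to_intensity(d) for d in distances_m]


if __name__ == "__main__":
    # Hypothetical frame: 19 readings, one per actuator, obstacle on one side.
    frame = [0.5] * 5 + [2.0] * 9 + [4.0] * 5
    print(frame_to_intensities(frame))
```

A real system would also need to downsample the LiDAR depth map to one value per actuator and handle missing readings, both of which are left out here.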

Fancy more stories like this? Earlier this year, we wrote about these lightweight haptic gloves which, for those who notice such things, feature a similar hexagonal array of 19 sensors. A pattern for success? You can read the original article on TechXplore here.

![[miko_tarik] wearing diy AR goggles in futuristic setting](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-1200.jpg?w=600&h=450)

![[miko_tarik] wearing diy AR goggles](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-smallphoto.jpg?w=400)

Creating Zero wasn’t simple. From designing the frame in Tinkercad to experimenting with transparent PETG to print lenses (ultimately switching to resin-cast lenses), [mi_kotalik] faced plenty of challenges. By customizing SPI displays and optimizing them to 60 FPS, he achieved an impressive level of real-time responsiveness, allowing him to explore AR interactions like never before. While the Raspberry Pi Zero’s power is limited, [mi_kotalik] is already planning a V2 with a Compute Module 4 to enable 3D rendering, GPS, and spatial tracking.
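To get a feel for why 60 FPS over SPI takes some optimizing, it helps to work out the raw pixel bandwidth. The short Python sketch below does that arithmetic. The article does not state the display resolution or colour depth, so the 240×240, 16-bit figures are purely assumed for illustration, and the calculation ignores command and protocol overhead.

```python
def required_spi_clock_hz(width: int, height: int,
                          bits_per_pixel: int, fps: int) -> int:
    """Minimum SPI bit rate needed to push full frames at `fps`.

    Counts pixel data only; real transfers add command bytes and gaps
    between transactions, so treat this as a lower bound.
    """
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    # Hypothetical panel: 240x240 pixels, 16 bits per pixel (RGB565).
    rate = required_spi_clock_hz(240, 240, 16, 60)
    print(f"{rate / 1e6:.1f} Mbit/s of raw pixel data")
```

Under those assumptions a full-frame refresh needs roughly 55 Mbit/s per display, which suggests why tricks such as redrawing only the changed regions are commonly used on small SPI panels.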

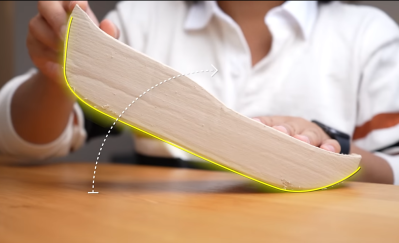

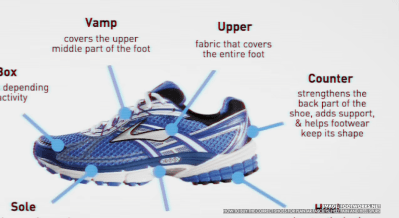

process, given that there is indeed a fair amount of science to shoe design. First, after a quick run, the main pain points with some existing shoes were identified: feet hurt from all the impacts, and knees take a real pounding, too. That meant the sole cushioning needed to increase. They also felt that too much energy was wasted because the shoes did not promote forward motion as much as possible; feet tended to bounce upwards, so a rocker sole shape would help. Finally, laces and other upper features cause distraction and some comfort issues, so those can be deleted.
