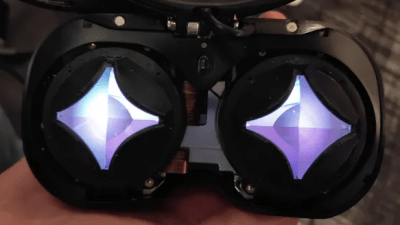

Some readers may recall the Lynx-R1 headset. It was conceived as an Android virtual reality (VR) and mixed reality (MR) headset with built-in hand tracking, designed to be open where others were closed, allowing developers and users access to its inner workings in defiance of walled gardens. It looked very promising, with features rivaling (or surpassing) those of its contemporaries.

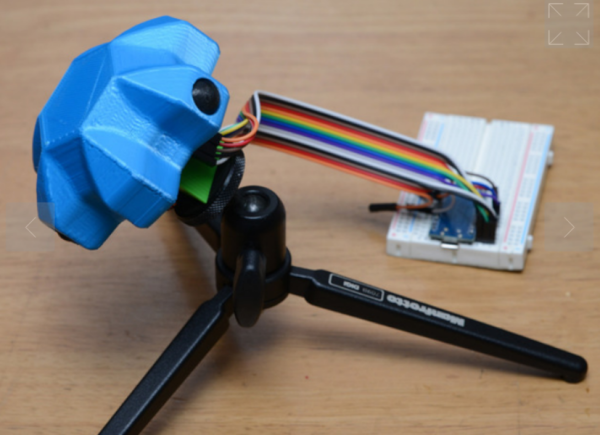

Founder [Stan Larroque] recently announced that Lynx’s 6DoF SLAM (simultaneous localization and mapping) solution has been released as open source. It is a version of ORB-SLAM3 modified for Android-based hardware (GitHub repository) that takes in camera images and outputs a 6DoF pose, and does so in effectively real time. The repository contains some additional details as well as a demo application that can run on the Lynx-R1 headset.

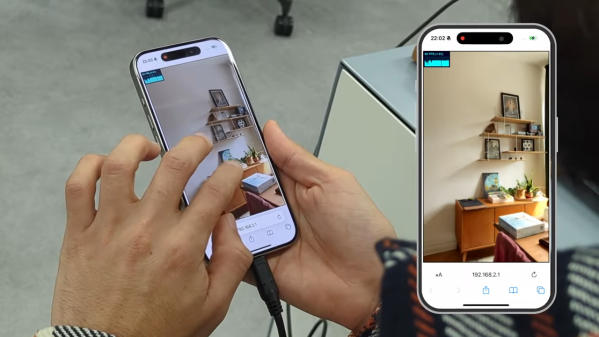

As a headset, the Lynx-R1 had a number of intriguing elements. The unusual optics, the flip-up design, and built-in hand tracking were impressive for its time, as was the high-quality mixed reality pass-through. That last feature refers to the headset using its external cameras as inputs to let the user see the real world, but with the ability to display virtual elements that appear anchored to real-world locations. Doing this depends heavily on the headset being able to track its position in the real world with both high accuracy and low latency, and this is what ORB-SLAM3 provides.
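To make the anchoring idea concrete, here is a minimal Python sketch of what a renderer might do with a 6DoF pose each frame: take a point fixed in the world and express it relative to the headset. This is not the Lynx or ORB-SLAM3 API. The function names, the (w, x, y, z) quaternion layout, and the convention that the orientation rotates headset-frame vectors into the world frame are all assumptions made for illustration.

```python
def quat_rotate(q, v):
    """Rotate 3D vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + (q_vec x t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def world_to_headset(position, orientation, point_world):
    """Express a world-anchored point in the headset's own frame.

    position:    headset position in the world frame (x, y, z)
    orientation: unit quaternion (w, x, y, z), assumed to rotate
                 headset-frame vectors into the world frame
    """
    w, x, y, z = orientation
    inverse = (w, -x, -y, -z)  # the conjugate inverts a unit quaternion
    offset = tuple(p - c for p, c in zip(point_world, position))
    return quat_rotate(inverse, offset)


# Hypothetical anchor: a virtual object pinned 2 m in front of the
# starting position (using -z as "forward", another assumption).
anchor = (0.0, 0.0, -2.0)
identity = (1.0, 0.0, 0.0, 0.0)
print(world_to_headset((0.0, 0.0, 0.0), identity, anchor))
print(world_to_headset((1.0, 0.0, 0.0), identity, anchor))
```

When the headset moves but the anchor does not, the anchor's headset-relative coordinates change by exactly the opposite amount, which is what makes the object look fixed in the room. Any error or delay in the tracked pose shows up directly as the virtual object drifting or swimming against the pass-through image.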

A successful crowdfunding campaign for the Lynx-R1 in 2021 showed that a significant number of people were on board with what Lynx was offering, but developing brand new consumer hardware is a challenging road for many reasons unrelated to developing the actual thing. There was a hands-on at a trade show in 2021 and units were originally intended to ship out in 2022, but sadly that didn’t happen. Units still occasionally trickle out to backers and pre-order customers according to the unofficial Discord, but it’s safe to say things didn’t really go as planned for the R1.

It remains a genuinely noteworthy piece of hardware, especially considering it was not a product of one of the tech giants. If we manage to get our hands on one of them, we’ll certainly give you a good look at it.