You can get all kinds of great wildlife footage if you trek out into the woods with a camera, but it can be tough to stay awake all night. However, this is a task you can readily automate, as [Luke] did with his DIY trail camera.

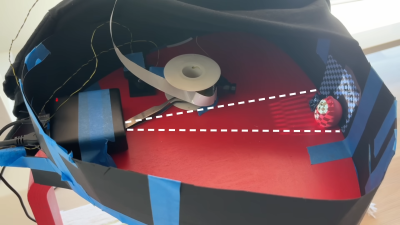

A Raspberry Pi Zero 2W serves as the heart of the build. It’s compact and runs on very little power, yet offers considerably more processing power than the original Raspberry Pi Zero. It’s kitted out with the Raspberry Pi AI Camera, which is built around the Sony IMX500 Intelligent Vision Sensor, providing a great platform for neural networks doing image classification and similar machine learning tasks. A Witty Pi power management module is used both for its real-time clock and to schedule start-ups and shutdowns, making the most of the power on offer from the batteries. All these components are wrapped up in a 3D printed housing to keep the Pi safe out in the wild.
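To get a feel for why scheduled start-ups and shutdowns matter on battery power, here is a rough back-of-the-envelope sketch in Python. It is not taken from [Luke]'s build: the function names are made up for illustration, and every number in the usage example is a hypothetical placeholder rather than a measurement of this hardware. It also ignores regulator losses and battery derating, so treat the result as an optimistic upper bound.

```python
def average_current_ma(on_hours_per_day, on_current_ma, off_current_ma):
    """Mean current draw over a 24-hour cycle for a device on a daily schedule."""
    if not 0 <= on_hours_per_day <= 24:
        raise ValueError("on_hours_per_day must be between 0 and 24")
    off_hours = 24 - on_hours_per_day
    return (on_hours_per_day * on_current_ma + off_hours * off_current_ma) / 24


def runtime_days(capacity_mah, on_hours_per_day, on_current_ma, off_current_ma):
    """Estimated days of operation from a battery of the given capacity.

    Ignores conversion losses, self-discharge, and temperature effects.
    """
    avg = average_current_ma(on_hours_per_day, on_current_ma, off_current_ma)
    if avg <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / avg / 24


if __name__ == "__main__":
    # All values below are hypothetical placeholders, not figures from the build.
    always_on = runtime_days(10_000, 24, 300, 1)
    nights_only = runtime_days(10_000, 10, 300, 1)
    print(f"always on:   {always_on:.1f} days")
    print(f"nights only: {nights_only:.1f} days")
```

Swapping in measured currents for your own hardware shows how much runtime a tighter schedule buys, which is the trade-off the power management module is there to handle.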

We’ve seen some neat projects in this vein before.